Objectives

- Describe cognitive science.

- Assess how the human mind processes and generates information and knowledge.

- Explore cognitive informatics.

- Examine artificial intelligence and its relationship to cognitive science and computer science.

Introduction

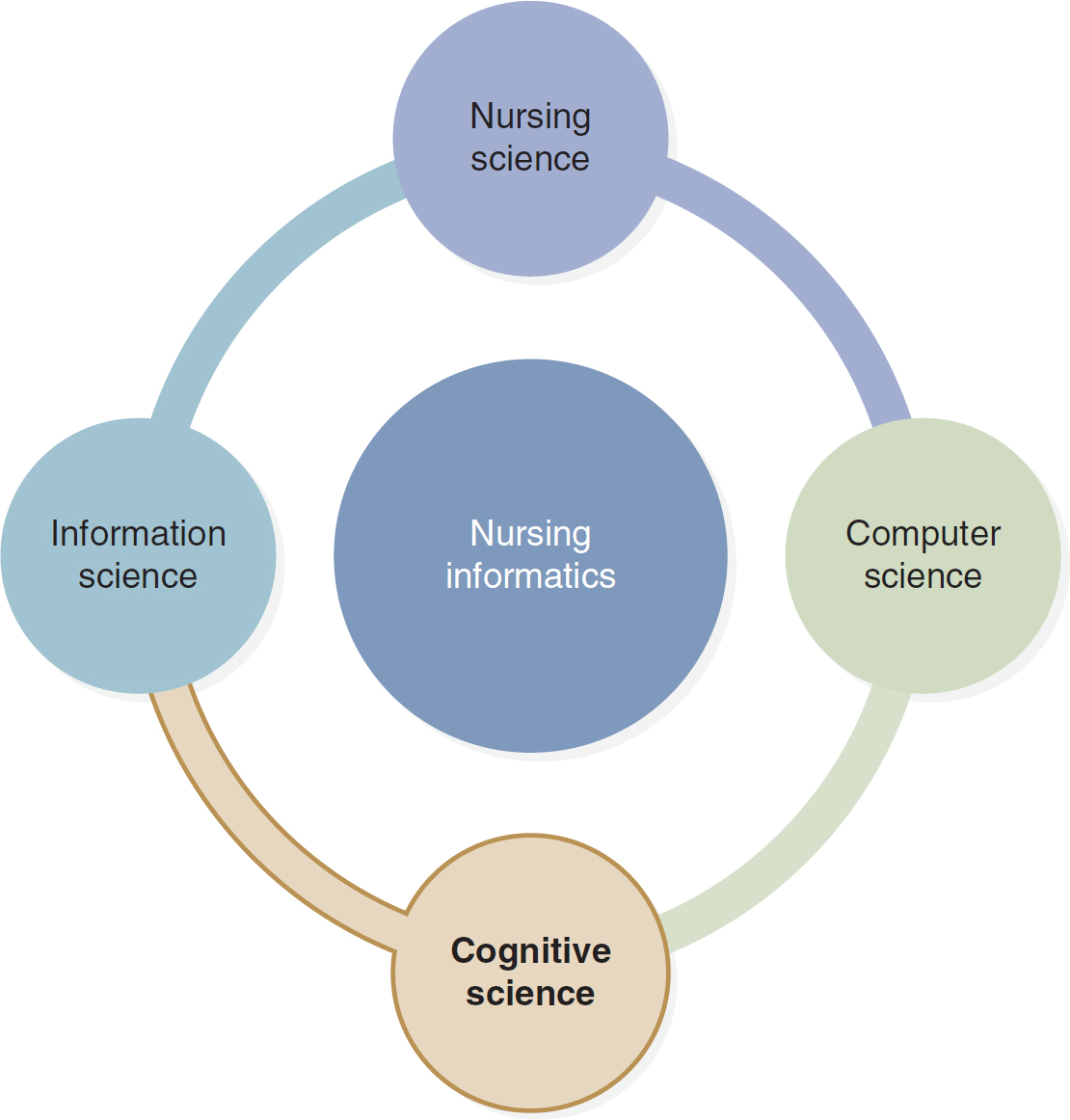

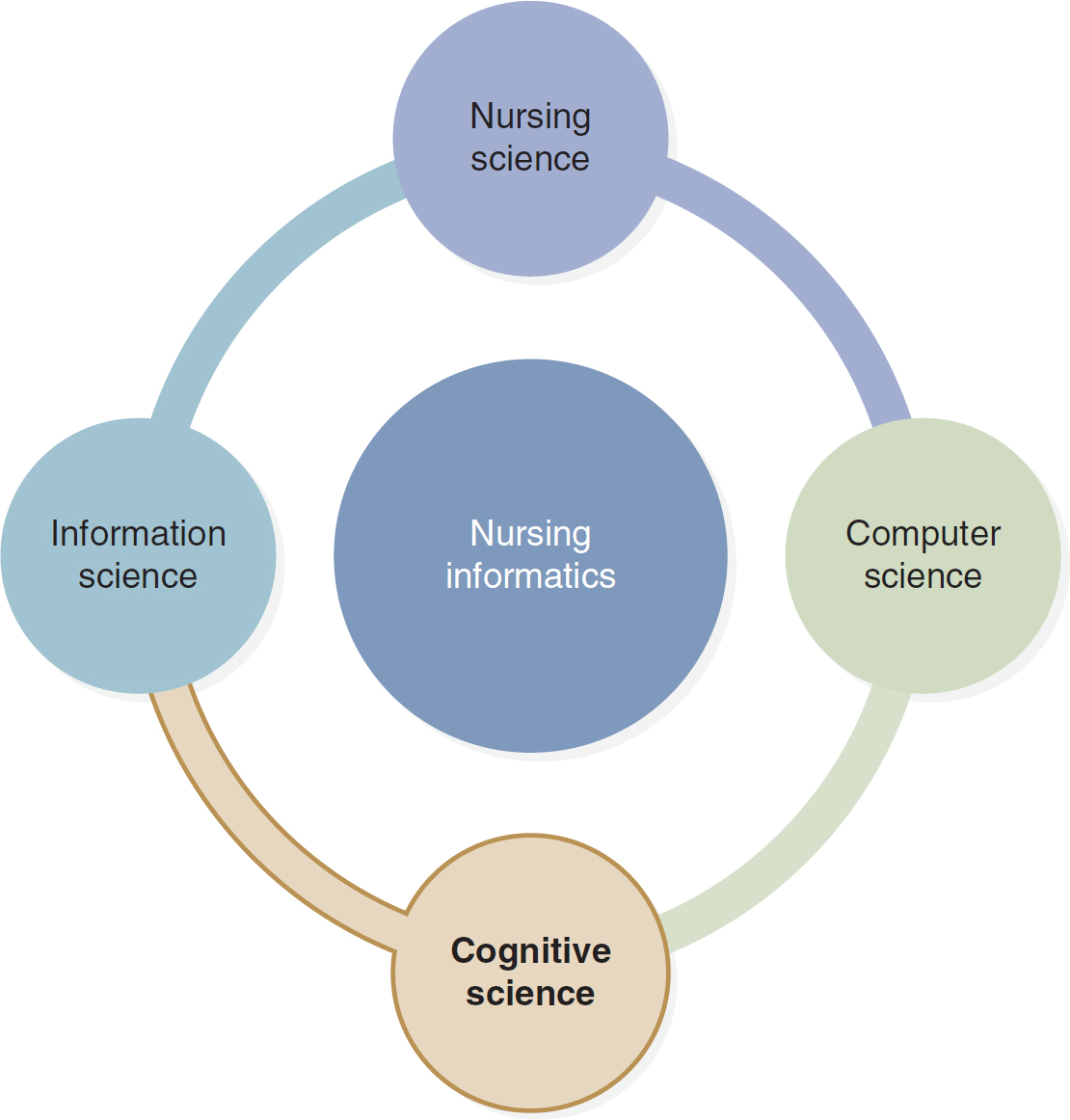

Cognitive science is the fourth of the four basic building blocks used to understand informatics (Figure 4-1). The Building Blocks of Nursing Informatics section began by examining nursing science, information science, and computer science and by considering how each science relates to and helps the reader understand informatics. This chapter explores the critical aspects of cognitive science and how it supports cognitive informatics (CI) and artificial intelligence (AI).

Figure 4-1 Building Blocks of Nursing Informatics

The four building blocks of nursing informatics include nursing science, computer science, cognitive science, and information science.

Throughout the centuries, cognitive science has intrigued philosophers and educators alike. Beginning in Greece, the ancient philosophers sought to comprehend how the mind works and the nature of knowledge. This age-old quest to unravel the processes inherent in the working brain has been undertaken by some of the greatest minds in history. However, it was only about 50 years ago that computer operations and actions were linked to cognitive science, meaning theories of the mind, intellect, or brain. This association led to the expansion of cognitive science to examine the complete array of cognitive processes, from lower-level perceptions to higher-level critical thinking, logical analysis, and reasoning.

The focus of this chapter is the impact of cognitive science on nursing informatics (NI). This section provides the reader with an introduction and overview of cognitive science; the nature of knowledge, wisdom, and AI as they apply to the Foundation of Knowledge model; and NI. The applications to NI include problem-solving, decision support systems, usability issues, user-centered interfaces and systems, and the development and use of terminologies.

Cognitive Science

The interdisciplinary field of cognitive science studies the mind, intelligence, and behavior from an information-processing perspective. H. Christopher Longuet-Higgins originated the term cognitive science in his 1973 commentary on the Lighthill report, which pertained to the state of AI research at that time. The journal Cognitive Science and the Cognitive Science Society date back to the late 1970s. The interdisciplinary base of cognitive science arises from psychology, philosophy, neuroscience, computer science, linguistics, biology, and physics. Its focus covers memory, attention, perception, reasoning, language, mental ability, and computational models of cognitive processes. The science explores the nature of the mind, knowledge representation, language, problem-solving, decision-making, and the social factors influencing the design and use of technology. Simply put, cognitive science is the study of the mind and how information is processed in the mind. As described in the Stanford Encyclopedia of Philosophy (Thagard, 2023):

The central hypothesis of cognitive science is that thinking can best be understood in terms of representational structures in the mind and computational procedures that operate on those structures. While there is much disagreement about the nature of the representations and computations that constitute thinking, the central hypothesis is general enough to encompass the current range of thinking in cognitive science, including connectionist theories which model thinking using artificial neural networks. (para. 109)

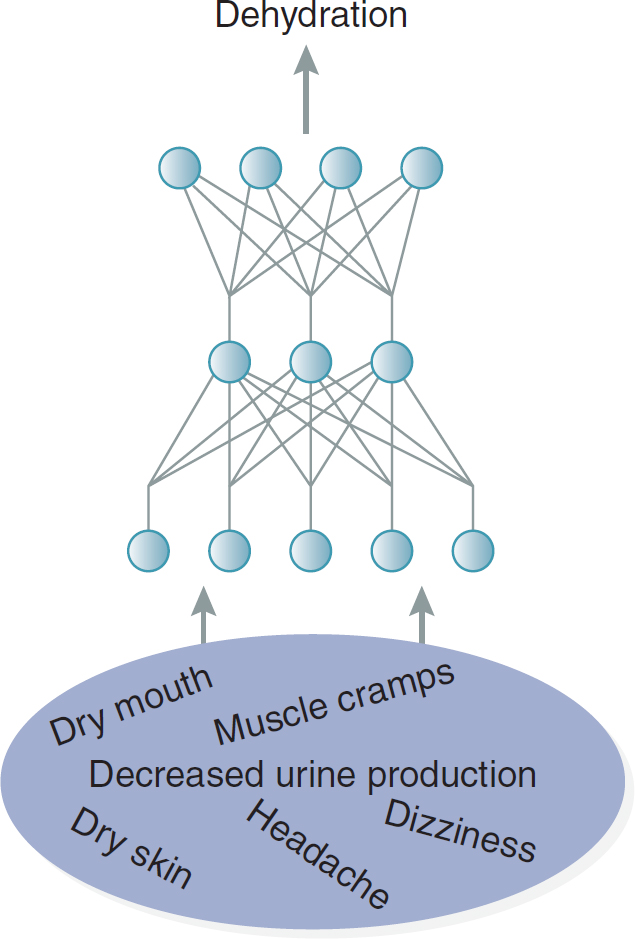

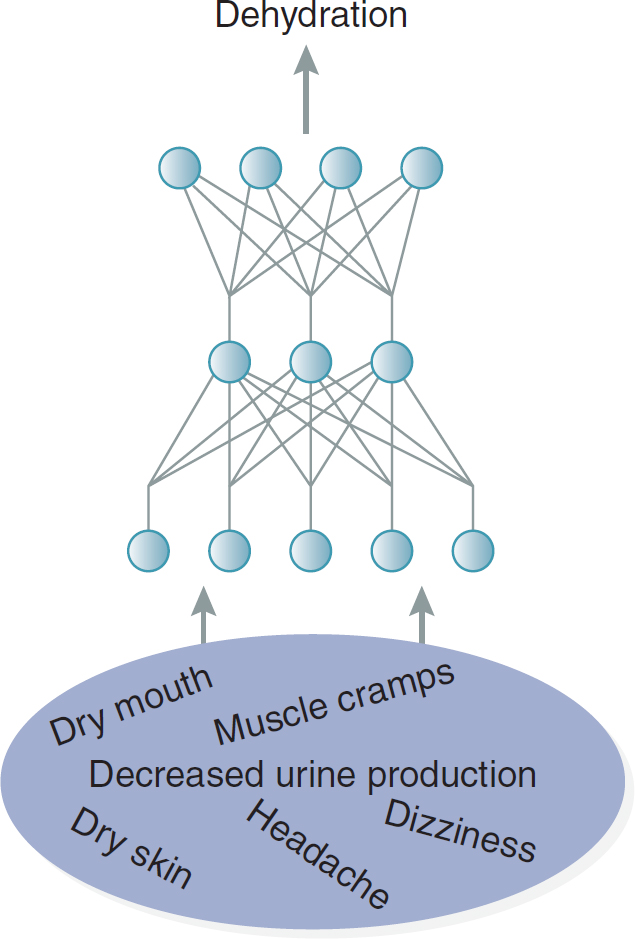

Connectionism is a component of cognitive science that uses computer modeling through artificial neural networks to explain human intellectual abilities. Neural networks can be thought of as interconnected simple processing devices or simplified models of the brain and nervous system that consist of a considerable number of elements or units (i.e., analogs of neurons) linked together in a pattern of connections (i.e., analogs of synapses). A neural network that models the entire nervous system would have three types of units: (1) input units (i.e., analogs of sensory neurons), which receive information to be processed; (2) hidden units (i.e., analogs to all the other neurons, not sensory or motor), which work in between input and output units; and (3) output units (i.e., analogs of motor neurons), where the outcomes, or results of the processing, are found.
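The three kinds of units described above can be sketched in a few lines of Python. This is a minimal illustration rather than a model of any real nervous system: the layer sizes, the sigmoid activation, and every weight and bias value are assumptions chosen by hand so the example runs.

```python
import math


def sigmoid(x: float) -> float:
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def layer(inputs, weights, biases):
    """Compute one layer of units.

    Each unit sums its weighted incoming connections (the analogs of
    synapses), adds a bias, and applies the sigmoid activation.
    """
    return [
        sigmoid(sum(w * x for w, x in zip(unit_weights, inputs)) + bias)
        for unit_weights, bias in zip(weights, biases)
    ]


def forward(inputs, hidden_weights, hidden_biases, output_weights, output_biases):
    """Pass a signal from the input units through the hidden units to the output units."""
    hidden = layer(inputs, hidden_weights, hidden_biases)
    return layer(hidden, output_weights, output_biases)


# Hypothetical connection strengths, picked by hand for illustration only.
HIDDEN_WEIGHTS = [[0.5, -0.4, 0.3], [-0.2, 0.8, 0.1]]  # 2 hidden units, 3 inputs each
HIDDEN_BIASES = [0.0, -0.1]
OUTPUT_WEIGHTS = [[1.2, -0.7]]                          # 1 output unit, 2 hidden inputs
OUTPUT_BIASES = [0.05]

# Three input units receive the information to be processed.
outputs = forward([1.0, 0.0, 1.0], HIDDEN_WEIGHTS, HIDDEN_BIASES,
                  OUTPUT_WEIGHTS, OUTPUT_BIASES)
print(outputs)  # a single value between 0 and 1
```

Information flows in one direction here: the input list plays the role of the sensory units, the intermediate `hidden` list plays the role of the hidden units, and the returned list is what the output units report.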

Connectionism (Figure 4-2) is rooted in how computation occurs in the brain and nervous system, or biological neural networks. On their own, single neurons have minimal computational capacity. When interconnected with other neurons, however, they have immense computational power. The connectionism system learns by modifying the connections linking the neurons. Artificial neural networks are unique computer programs designed to simulate their biological analogs, the neurons of the brain.

Figure 4-2 Connectionism

The concept of connectionism features a network of interconnected elements leading to dehydration. The elements are the symptoms of dehydration, including dry mouth, muscle cramps, decreased urine production, dry skin, headache, and dizziness.
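The idea that a connectionist system learns by modifying its connections can be illustrated with a single artificial neuron trained by the classic perceptron rule, using the dehydration symptoms from Figure 4-2 as inputs. Everything beyond the symptom names is an assumption made for the sketch: in particular, the labeling rule ("three or more symptoms present") is a toy rule invented so the example has something to learn, not a clinical criterion.

```python
from itertools import product

SYMPTOMS = [
    "dry mouth", "muscle cramps", "decreased urine production",
    "dry skin", "headache", "dizziness",
]


def predict(weights, bias, inputs):
    """Fire (return 1) when the weighted sum of the inputs exceeds zero."""
    activation = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1 if activation > 0 else 0


def train(examples, learning_rate=1.0, max_epochs=1000):
    """Perceptron rule: after each wrong answer, nudge every connection
    weight in the direction that would have reduced the error."""
    weights = [0.0] * len(SYMPTOMS)
    bias = 0.0
    for _ in range(max_epochs):
        mistakes = 0
        for inputs, target in examples:
            error = target - predict(weights, bias, inputs)
            if error != 0:
                mistakes += 1
                weights = [w + learning_rate * error * x
                           for w, x in zip(weights, inputs)]
                bias += learning_rate * error
        if mistakes == 0:  # every example is classified correctly
            break
    return weights, bias


# Toy data: every combination of present (1) or absent (0) symptoms,
# labeled 1 when three or more are present (a hypothetical rule).
examples = [
    (pattern, 1 if sum(pattern) >= 3 else 0)
    for pattern in product([0, 1], repeat=len(SYMPTOMS))
]

weights, bias = train(examples)
for name, weight in zip(SYMPTOMS, weights):
    print(f"{name}: {weight}")
```

No rule about "three or more symptoms" is ever written into `train`; the behavior emerges only from repeated adjustments to the connection weights, which is the point the connectionist account makes.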

The mind is frequently compared to a computer, and experts in computer science strive to understand how the mind processes data and information. In contrast, experts in cognitive science model human thinking using artificial networks provided by computers, an endeavor sometimes referred to as AI. How does the mind process all of the inputs received? Which items are stored in memory, and in which ways are they accessed, augmented, changed, reconfigured, and restored? Cognitive science provides the scaffolding for the analysis and modeling of complicated, multifaceted human performance and has a tremendous effect on the issues affecting informatics.

The end user is the focus of this activity because the concern is with enhancing performance in the workplace. In nursing, the end user could be the clinician in the clinical setting, and cognitive science can enhance the integration and implementation of the technologies designed to support this knowledge worker, with the ultimate goal of improving patient care delivery. Technologies change rapidly, and this evolution must be harnessed for the clinician at the bedside. To do this at all levels of nursing practice, one must understand the nature of knowledge, the information and knowledge needed, and the means by which the nurse processes this information and knowledge in the situational context.

Sources of Knowledge

Just as philosophers have questioned the nature of knowledge, so too have they strived to determine how knowledge arises because the origins of knowledge can help us understand its nature. How do people come to know what they know about themselves, others, and their world? There are many viewpoints on this issue, both scientific and nonscientific.

Three sources of knowledge have been identified: (1) instinct, (2) reason, and (3) intuition. Instinct is when one reacts without reason, such as when a car is heading toward a pedestrian and they jump out of the way without thinking. Instinct is found in both humans and animals, whereas reason and intuition are found only in humans. Reason “[c]ollects facts, generalizes, reasons out from cause to effect, from effect to cause, from premises to conclusions, from propositions to proofs” (Sivananda, 2017, para. 4). Intuition is a way of acquiring knowledge that cannot be obtained by inference, deduction, observation, reason, analysis, or experience. Intuition was described by Aristotle as “[a] leap of understanding, a grasping of a larger concept unreachable by other intellectual means, yet fundamentally an intellectual process” (Shallcross & Sisk, n.d.).

Some believe that knowledge is acquired through perception and logic. Perception is the process of acquiring knowledge about the environment or situation by obtaining, interpreting, selecting, and organizing sensory information from seeing, hearing, touching, tasting, and smelling. Logic is a discipline that relies on reasoned action: drawing inferences or conclusions from premises, that is, deriving new data and information by analyzing and synthesizing other data and information that are known to be true. Acquiring knowledge through logic requires reasoned action to make valid inferences.

The sources of knowledge provide a variety of inputs, throughputs, and outputs through which knowledge is processed. No matter how you believe knowledge is acquired, it is important to be able to explain or describe those beliefs, communicate those thoughts, enhance shared understanding, and discover the nature of knowledge.

Nature of Knowledge

Epistemology is the study of the nature and origin of knowledge, that is, what it means to know. Everyone has a conception of what it means to know based on their own perceptions, education, and experiences; knowledge is a part of life that continues to grow with the person. Thus, a definition of knowledge is somewhat difficult to agree on because it reflects the viewpoints, beliefs, and understandings of the person or group defining it. Some people believe that knowledge is part of a sequential learning process that resembles a pyramid, with data on the bottom, rising to information, then knowledge, and finally wisdom. Others believe that knowledge emerges from interactions and experience with the environment, and still others think that it is religiously or culturally bound. Knowledge acquisition is thought to be an internal process derived through thinking and cognition or an external process from senses, observations, studies, and interactions. Descartes's important premise “called ‘the way of ideas’ represents the attempt in epistemology to provide a foundation for our knowledge of the external world (as well as our knowledge of the past and of other minds) in the mental experiences of the individual” (Martinich & Stroll, 2023, para. 4).

For the purpose of this text, knowledge is defined as the awareness and understanding of a set of information and ways that information can be made useful to support a specific task or arrive at a decision. It abounds with others' thoughts and information or consists of information that is synthesized so that relationships are identified and formalized.

How Knowledge and Wisdom Are Used in Decision-Making

The reason for collecting and building data, information, and knowledge is to be able to make informed, judicious, prudent, and intelligent decisions. When you consider the nature of knowledge and its applications, you must also examine the concept of wisdom. Wisdom has been defined in numerous ways:

- Knowledge applied in a practical way or translated into actions

- The use of knowledge and experience to heighten common sense and insight to exercise sound judgment in practical matters

- The highest form of common sense resulting from accumulated knowledge or erudition (i.e., deep, thorough learning) or enlightenment (i.e., education that results in understanding and the dissemination of knowledge)

- The ability to apply valuable and viable knowledge, experience, understanding, and insight while being prudent and sensible

- Focused on our own minds

- The synthesis of our experience, insight, understanding, and knowledge

- The appropriate use of knowledge to solve human problems

In essence, wisdom entails knowing when and how to apply knowledge. The decision-making process revolves around knowledge and wisdom. It is through efforts to understand the nature of knowledge and its evolution to wisdom that one can conceive of, build, and implement informatics tools that enhance and mimic the mind's processes to facilitate decision-making and job performance.

Cognitive Informatics

Wang (2003) described cognitive informatics (CI) as an emerging transdisciplinary field of study that bridges the gap in understanding regarding how information is processed in the mind and in the computer. Computing and informatics theories can be applied to help elucidate the information processing of the brain, and cognitive and neurological sciences can likewise be applied to build better and more efficient computer processing systems. Wang suggested that the common issue among the human knowledge sciences is the drive to develop an understanding of natural intelligence and human problem-solving.

Patel et al. (2014) defined CI as “the multidisciplinary study of cognition, information and computational sciences that investigates all facets of human computing including design and computer-mediated intelligent action, thus is strongly grounded in methods and theories from cognitive science” (para. 1). CI helps to bridge this gap by systematically exploring the mechanisms of the brain and mind and specifically how information is acquired, represented, remembered, retrieved, generated, and communicated. This dawning of understanding can then be applied and modeled in AI situations, which will then result in more efficient computing applications.

Wang (2003) explained further:

Cognitive informatics attempts to solve problems in two connected areas in a bidirectional and multidisciplinary approach. In one direction, CI uses informatics and computing techniques to investigate cognitive science problems, such as memory, learning, and reasoning; in the other direction, CI uses cognitive theories to investigate the problems in informatics, computing, and software engineering. (p. 120)

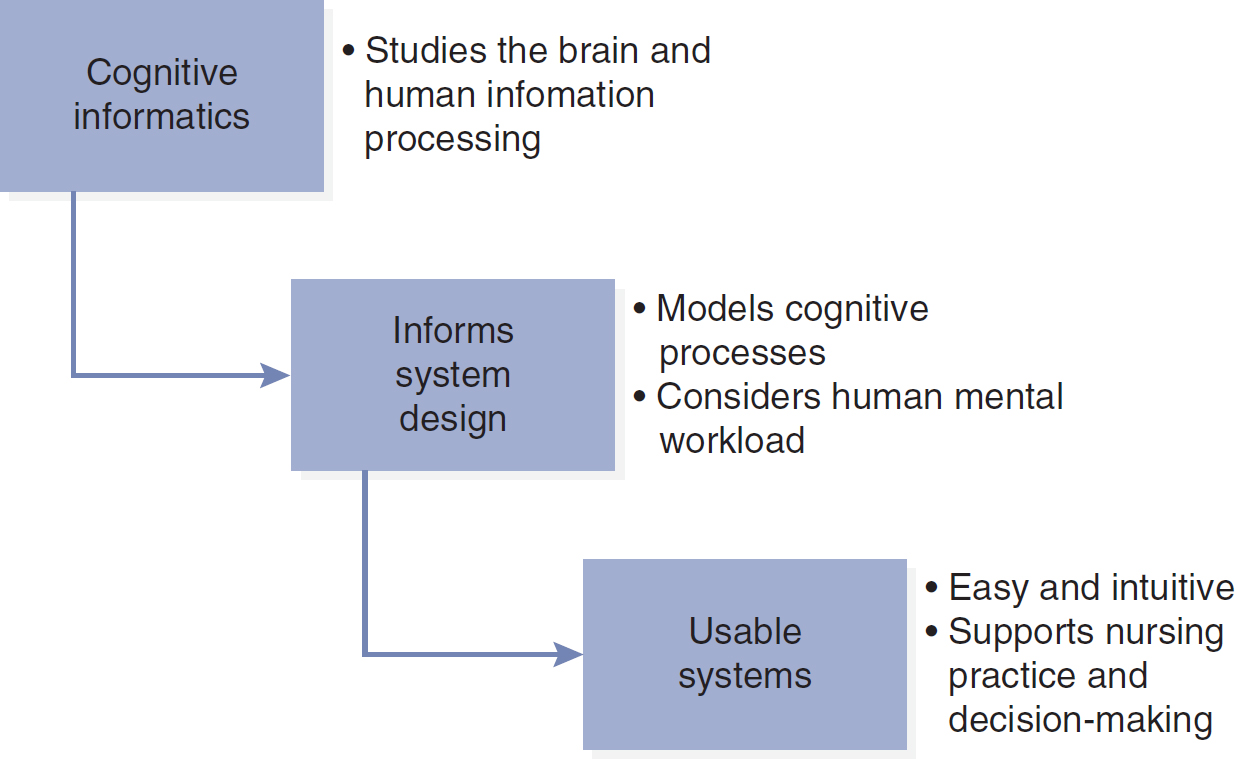

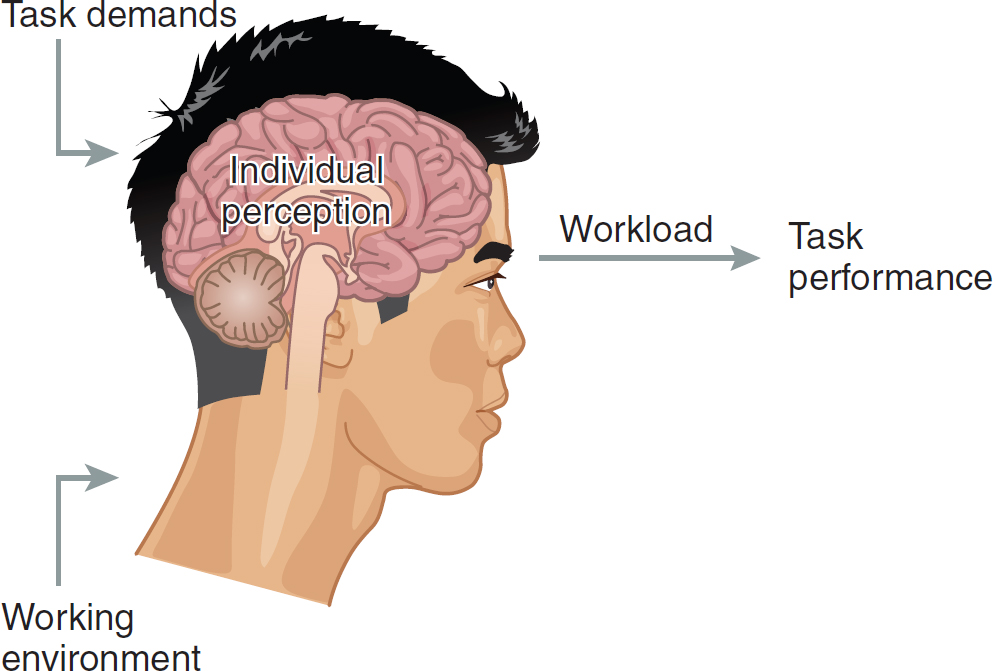

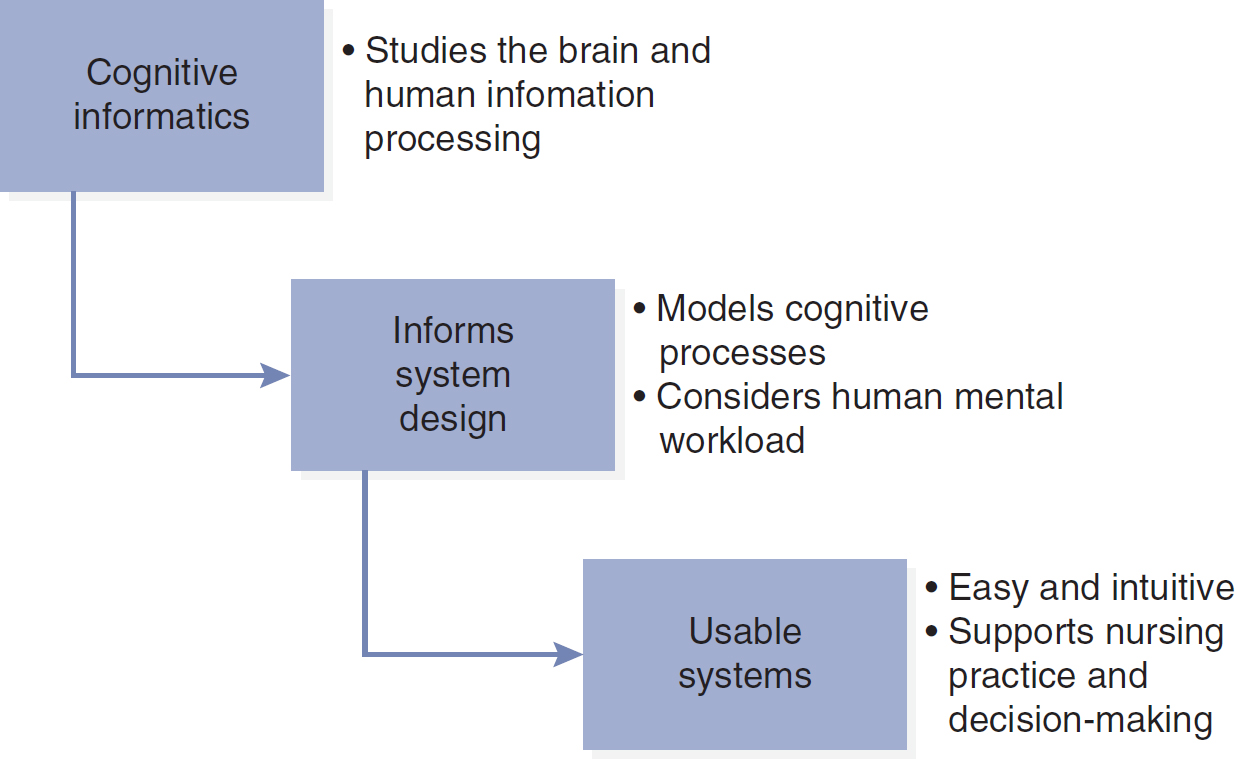

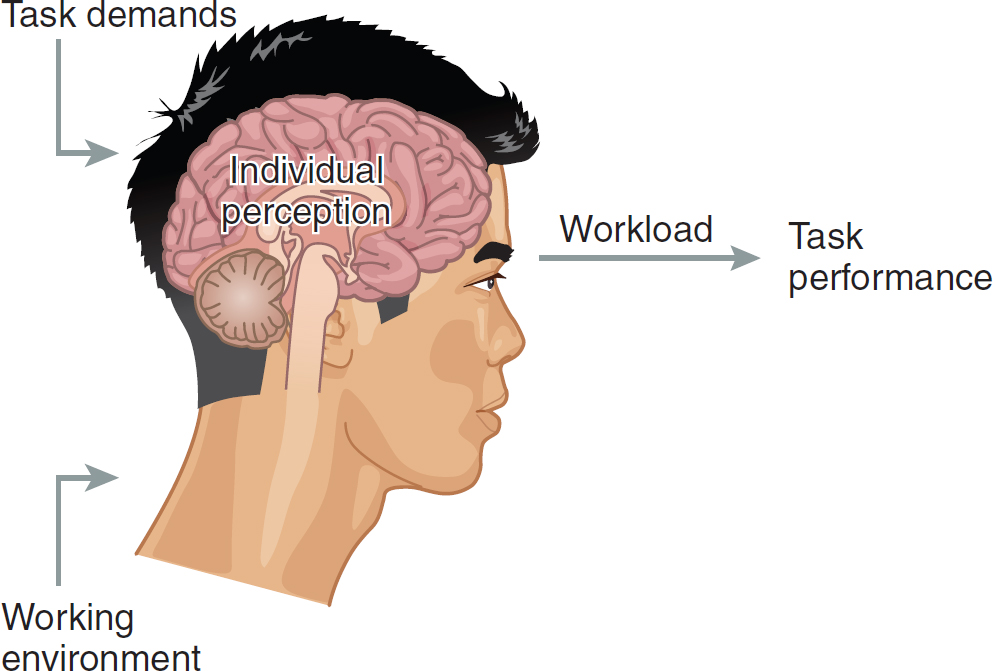

Principles of cognitive informatics and an understanding of how humans interact with computers can be used to build information technology (IT) systems that better meet the needs of users (Figure 4-3). If a system is too complex or taxing for users, they are likely to resist its use. The National Center for Cognitive Informatics and Decision Making in Healthcare (NCCD) is funded by the Office of the National Coordinator for Health IT (ONC) under the Strategic Health IT Advanced Research Projects (SHARP) program. The NCCD was established to respond to the cognitive challenges in health IT and to “bring together a collaborative, interdisciplinary team of researchers across the nation with the highest level of expertise in patient centered cognitive support research” (SHARP, 2010, p. 6). Similarly, Longo (2015) emphasized human mental workload (MWL) as a key component in effective system design (Figure 4-4). He stated the following:

At a low level of MWL, people may often experience annoyance and frustration when processing information. On the other hand, a high level can also be both problematic and even dangerous, as it leads to confusion, decreases performance in information processing and increases the chances of errors and mistakes. (p. 758)

Figure 4-3 Cognitive Informatics Leads to Usable Systems

A flow diagram depicts the relationship between cognitive informatics and usable systems.

The sequence from cognitive informatics to usable systems unfolds in the following manner. 1. Cognitive informatics: Focuses on studying the brain and human information processing. 2. Informs system design: Involves modeling cognitive processes and considering human mental workload. 3. Usable systems: Result in designs that are easy and intuitive, supporting nursing practice and decision-making.

Figure 4-4 Human Mental Workload

An illustration of a person's head featuring the brain with individual perception elucidates the concept of mental workload. The concept implies that task demands and working environment influence workload, which in turn impacts task performance.

Cognitive Informatics and Nursing Practice

According to Mastrian (2008), the recognition of the potential application of principles of cognitive science to NI is relatively new. The traditional and widely accepted definition of NI advanced by Graves and Corcoran (1989) is that NI is a combination of nursing science, computer science, and information science used to describe the processes nurses employ to manage data, information, and knowledge in nursing practice. Turley (1996) proposed the addition of cognitive science to this mix because nurse scientists are seen to strive to capture and explain the influence of the human brain on data, information, and knowledge processing and to elucidate how these factors in turn affect nursing decision-making. The need to include cognitive sciences is imperative as researchers attempt to model and support nursing decision-making in complex computer programs.

In 2003, Wang proposed the term cognitive informatics to signify the branch of information and computer sciences that investigates and explains information processing in the human brain. The science of CI grew out of interest in AI, based on computer scientists developing computer programs that mimic the information processing and knowledge generation functions of the human brain. CI bridges the gap between artificial and natural intelligence and enhances the understanding of how information is acquired, processed, stored, and retrieved so that these functions can be modeled in computer software.

What does CI have to do with nursing? At its very core, nursing practice requires problem-solving and decision-making. Nurses help people manage their responses to illnesses and identify ways that patients can maintain or restore their health. During the nursing process, nurses must first recognize that there is a problem to be solved and then they must identify the nature of the problem, pull relevant information from knowledge stores, decide on a plan of action, implement the plan, and evaluate the effectiveness of the interventions. When nurses have practiced the science of nursing for some time, they tend to do these processes automatically; they instinctively know what needs to be done to intervene in the problem. What happens, however, if nurses face a situation or problem for which they have no experience on which to draw? The ever-increasing acuity and complexity of patient situations, coupled with the explosion of information in health care, have fueled the development of decision support software embedded in the electronic health record. This software models the human and natural decision-making processes of professionals in an artificial program. Such systems can help decision-makers to consider the consequences of different courses of action before implementing one of them. They also provide stores of information that users may not be aware of and that they can use to choose the best course of action and ultimately make a better decision in unfamiliar circumstances.
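One very simple way such decision support could be structured is as a store of rules that is checked against a patient's current observations. The sketch below is only an illustration of that idea, not how any particular electronic health record works: the `Rule` class, the observation names, the thresholds, and the suggestion wording are all hypothetical placeholders with no clinical authority.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Sequence

Observations = Dict[str, float]


@dataclass(frozen=True)
class Rule:
    """One entry in the knowledge store: a condition plus a suggestion."""
    name: str
    applies: Callable[[Observations], bool]
    suggestion: str


# Hypothetical rules; the thresholds are placeholders for illustration only.
RULES: Sequence[Rule] = (
    Rule(
        name="low urine output",
        applies=lambda obs: obs.get("urine_ml_per_hr", float("inf")) < 30,
        suggestion="review fluid balance and reassess hydration status",
    ),
    Rule(
        name="elevated heart rate",
        applies=lambda obs: obs.get("heart_rate", 0.0) > 110,
        suggestion="recheck vital signs and consider escalation",
    ),
)


def suggest(observations: Observations, rules: Sequence[Rule] = RULES) -> List[str]:
    """Return the suggestion of every rule whose condition is met.

    The nurse remains the decision-maker; the function only surfaces
    stored knowledge that may be relevant to the situation.
    """
    return [f"{rule.name}: {rule.suggestion}"
            for rule in rules if rule.applies(observations)]


for line in suggest({"urine_ml_per_hr": 20.0, "heart_rate": 118.0}):
    print(line)
```

A missing observation never triggers a rule in this sketch, because each condition falls back to a default value that fails its own test.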

Decision support programs continue to evolve as research in the fields of cognitive science, AI, and CI is continuously generated and then applied to the development of these systems. Nurses must embrace, not resist, these advances as support for and enhancement of the practice of nursing science. Patel and Kannampallil (2015) pointed out the following:

CI plays a key role-both in terms of understanding, describing and predicting the nature of clinical work activities of its participants (e.g., clinicians, patients, and lay public) and in terms of developing engineering and computing solutions that can improve clinical practice (e.g., a new decision-support system), patient engagement (e.g., a tool to remind patients of their medication schedule), and public health interventions (e.g., a mobile application to track the spread of an epidemic). (p. 4)

What Is AI?

The field of AI deals with the conception, development, and implementation of informatics tools based on intelligent technologies. This field attempts to mimic the complex processes of human thought and intelligence, using machines.

The challenge of AI use in health care rests in capturing, mimicking, and creating the complex processes of the mind in informatics tools, including software, hardware, and other machine technologies, with the goal that the tool be able to initiate and generate its own mechanical thought processing. The brain's processing is highly intricate and complicated. AI uses cognitive science and computer science to replicate and generate human intelligence. A self-driving car is an example of an AI system that collects, processes, and uses data to make decisions. In health care, AI systems gather and process data and make predictions of health risk and suggestions for care based on the available evidence. Examples of AI use in health care include diagnostic technologies and clinical decision support. As Luber (2011) explained, AI tools cannot be preprogrammed to anticipate and respond to specific situations because of the variability and complexity of situations. “Instead, an intelligent agent must be equipped with the ability to make decisions based on the information it has, and re-evaluate its past solutions to improve future decisions” (p. 3). This field has evolved and has produced artificially intelligent tools to enhance nurses' personal and professional lives. According to Columbia University (n.d.), “[N]ow more than ever, there is unparalleled promise for what AI in health care can achieve in saving and improving lives” (para. 1). However, AI is not likely to completely replace the human functions in health care because of the complexities associated with and the emotional aspects of health care. The American Medical Association has suggested the use of the term augmented intelligence to emphasize the assistive role of AI in enhancing human intelligence (www.ama-assn.org/amaone/augmented-intelligence-ai).

Those involved in developing AI do not fully understand what needs to be solved, and facets get overlooked. The complexity of healthcare challenges results in AI agents that do not perform well in the healthcare arena. Natural language processing (NLP) systems are still not where they need to be, especially at the provider-patient point of care. Translators and multilingual nurses and doctors are therefore still needed so that miscommunication or lack of communication does not disrupt care, especially in forming the patient-nurse and patient-doctor relationship, diagnosing, treating, and educating the patient (Columbia University, n.d.). It has been suggested that doctors, nurses, and other healthcare workers will not be replaced because of the need to touch patients while integrating the best technologies to improve patient outcomes (Baxter, 2019).

Machine learning (ML) is becoming an important part of AI usage in health care. ML is a subset of AI in which machines mine vast amounts of data from various sources to identify patterns and make predictions or decisions about the data without human intervention. The data analysis outputs of ML are predictive, proactive, and preventive; they can augment and evolve the work of nurses and doctors but not replace them. Some people thought that radiologists would be replaced by AI. Instead, healthcare professionals are able to make intelligent and informed decisions through algorithmic support that meticulously and precisely processes massive amounts of data at an incredible speed and identifies patterns that were previously undetected (Columbia University, n.d.; Oracle, n.d.). The healthcare team can receive updates and analytics that are synthesized into reports, which not only decreases their workloads but also allows them to proactively care for their patients. For example, the reports can highlight primary levels of prevention issues they need to address with specific patients under their care. This is a good illustration of augmented intelligence.
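The ML idea described above, predicting from patterns in data rather than from hand-written rules, can be shown with one of the simplest learning methods, k-nearest neighbors. This is a toy sketch: the two features, the labels, and every data point are invented for illustration, and k-nearest neighbors is only one of many techniques that fall under ML.

```python
import math
from collections import Counter

# Hypothetical training records: (heart rate, respiratory rate) -> label.
# All values are made up for illustration.
TRAINING = [
    ((70.0, 14.0), "low risk"),
    ((75.0, 16.0), "low risk"),
    ((68.0, 12.0), "low risk"),
    ((80.0, 15.0), "low risk"),
    ((115.0, 24.0), "high risk"),
    ((120.0, 28.0), "high risk"),
    ((110.0, 26.0), "high risk"),
    ((125.0, 30.0), "high risk"),
]


def knn_predict(training, query, k=3):
    """Label a new record by majority vote among its k closest training records."""
    nearest = sorted(training, key=lambda item: math.dist(item[0], query))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]


print(knn_predict(TRAINING, (118.0, 27.0)))
print(knn_predict(TRAINING, (72.0, 15.0)))
```

The prediction is a suggestion derived from similar past records; in keeping with the augmented intelligence framing, the clinician decides what to do with it.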

AI in the Future

As electronic health records become more ubiquitous and we have access to physiological data streamed in real time, we will have the potential to process vast amounts of data using AI tools, and we will begin to see data analytics that will enable machine processing that far exceeds the capabilities of the human mind. AI is already transforming how healthcare professionals practice and the patient experience. Deloitte (n.d.) stated that “[w]hile each AI technology can contribute significant value alone, the larger potential lies in the synergies generated by using them together across the entire patient journey, from diagnoses, to treatment, to ongoing health maintenance” (para. 8). As AI continues to evolve, we will realize greater patient engagement, improved patient outcomes and quality of care, and the personalization of the levels of prevention.

Summary

Cognitive science is the interdisciplinary field that studies the mind, intelligence, and behavior from an information-processing perspective. CI is a field of study that bridges the gap in understanding regarding how information is processed in the mind and in the computer. Computing and informatics theories can be applied to help elucidate the information processing of the brain, and cognitive and neurological sciences can likewise be applied to build better and more efficient computer-processing systems.

AI is the field that deals with the conception, development, and implementation of informatics tools based on intelligent technologies. This field attempts to mimic the complex processes of human thought and intelligence. AI uses cognitive science and computer science to replicate and generate human intelligence. More robust AI systems leveraging ML and deep learning techniques are likely to have a tremendous effect on health care in the future.

Clinicians must harness the sources of knowledge, the nature of knowledge, and the rapidly changing technologies to enhance their bedside care. Therefore, we must understand the nature of knowledge and knowledge processing, the information and knowledge needed, and the means by which nurses and machines process this information and knowledge in their own situational context. The reason for collecting and building data, information, and knowledge is to be able to build wisdom, that is, the ability to apply valuable and viable knowledge, experience, understanding, and insight while being prudent and sensible. Wisdom is focused on our own minds, the synthesis of our experience, insight, understanding, and knowledge. Nurses must use their wisdom and make informed, judicious, prudent, and intelligent decisions while providing care to patients, families, and communities. Cognitive science, CI, and AI will continue to evolve to help build knowledge and wisdom.

Thought-Provoking Questions

- How would you describe CI? Reflect on a plan of care that you have developed for a patient. How could CI be used to create tools to help with or support this important work?

- Think of a clinical setting with which you are familiar and envision how AI tools might be applied in this setting. Are there any current tools in use? Which current or emerging tools would enhance practice in this setting and why?

- Use your creative mind to think of a tool of the future based on CI that would support your practice.

References

- Baxter, M. (2019, September 11). Forget AI in healthcare, augmented intelligence and distributed ledger technology will transform healthcare. Information Age. www.information-age.com/augmented-intelligence-distributed-ledger-technology-healthcare-123485246

- Columbia University. (n.d.). The future of artificial intelligence in health care. https://ai.engineering.columbia.edu/ai-applications/ai-in-health-care

- Deloitte. (n.d.). The future of artificial intelligence in health care. www2.deloitte.com/us/en/pages/life-sciences-and-health-care/articles/future-of-artificial-intelligence-in-health-care.html

- Graves, J., & Corcoran, S. (1989). The study of nursing informatics. Image: Journal of Nursing Scholarship, 21(4), 227-230. https://doi.org/10.1111/j.1547-5069.1989.tb00148.x

- Longo, L. (2015). A defeasible reasoning framework for human mental workload representation and assessment. Behaviour & Information Technology, 34(8), 758-786. https://doi.org/10.1080/0144929X.2015.1015166

- Longuet-Higgins, H. C. (1973). Comments on the Lighthill report and the Sutherland reply. Chilton Computing. www.chilton-computing.org.uk/inf/literature/reports/lighthill_report/p004.htm

- Luber, S. (2011). Cognitive science artificial intelligence: Simulating the human mind to achieve goals. 3rd International Conference on Computer Research and Development, Shanghai, China (pp. 207-210). http://dx.doi.org/10.1109/ICCRD.2011.5764005

- Martinich, A. P., & Stroll, A. (2023). Epistemology. In Encyclopedia Britannica. www.britannica.com/topic/epistemology

- Mastrian, K. (2008, February). Invited editorial: Cognitive informatics and nursing practice. Online Journal of Nursing Informatics, 12(1). http://ojni.org/12_1/kathy.html

- Patel, V., & Kannampallil, T. (2015). Cognitive informatics in biomedicine and healthcare. Journal of Biomedical Informatics, 53, 3-14. https://doi.org/10.1016/j.jbi.2014.12.007

- Patel, V., Kaufman, D., & Cohen, T. (2014). Cognitive informatics in health and biomedicine: Case studies on critical care, complexity and errors. Springer. https://doi.org/10.1007/978-1-4471-5490-7

- Shallcross, D. J., & Sisk, D. A. (n.d.). What is intuition? Hyponoetics Glossary. www.hyponoesis.org/Glossary/Intuition/Intuition

- Sivananda, S. (2017). The four sources of knowledge. The Divine Life Society. www.dlshq.org/messages/knowledge.htm

- Strategic Health IT Advanced Research Projects. (2010). Area 2: Patient-centered cognitive support. http://informatics.mayo.edu/sharp/Images/8/82/SHARP-C_overviews.ppt.pdf

- Thagard, P. (2023, January 21). Cognitive science. In Stanford encyclopedia of philosophy. http://plato.stanford.edu/entries/cognitive-science

- Turley, J. (1996). Toward a model for nursing informatics. Image: Journal of Nursing Scholarship, 28(4), 309-313. https://doi.org/10.1111/j.1547-5069.1996.tb00379.x

- Wang, Y. (2003). Cognitive informatics: A new transdisciplinary research field. Brain and Mind, 4(2), 115-127. https://doi.org/10.1023/A:1025419826662