Objectives

- Describe reality.

- Differentiate between virtual reality, augmented reality, mixed reality, and extended reality.

- Assess how informatics supports and evolves the realities.

- Explore various realities to contemplate and critically think about in relation to your current reality and foreseeable realities while imagining your future realities.

- Assess the relationship between Internet of Things (IoT), Intelligence of Things (IoT), and Artificial Intelligence of Things (AIoT) and reality.

- Explore the effect of big data, robotics, and smart devices on our professional reality as well as on our patients' realities.

- Describe the concepts of AI and the metaverse in relation to reality.

- Assess the effect of the metaverse on our personal and professional lives.

- Contemplate the effect of the metaverse on our patients, their care, and the healthcare delivery system.

- Explore the concept of the metaverse and evaluate its future promise regarding nursing and nursing informatics.

Key Terms

Introduction

We wrote this chapter to give you pause to think about nursing in the context of informatics and, of course, reality. We designed it to challenge your foundation of knowledge and your reality. As you read this chapter, take the time to reflect on your own situational contexts, both personally and professionally.

You have expanded your information and knowledge about nursing informatics and how it affects practice and patient care. Now please consider informatics in relation to your reality.

Reality refers to the world or the state of things as they actually exist. When contemplating reality, is one person's reality different from another person's reality? If so, in what ways? Do people's experiences and education affect how they view the world or experience reality? If those questions do not pique your interest, perhaps this one will. When people enter virtual reality (VR), does that experience then become their reality, or do they suspend reality to enter fantasy? Augmented reality (AR) is an enhancement of people's reality. Does AR become their reality, or do they distinguish the changes as those that are added to their reality? If virtual, augmented, and actual realities are combined, what is real to the person?

Overview: Informatics and the Realities

Being able to distill big data into patterns and predictive models, along with the genome reality, has affected the way patients are treated; true patient-centric treatments and individualized plans of care can now be developed since the rise of personalized medicine. Here are some of the advancements that have enhanced patient care and transformed the healthcare reality:

- Exploring the patient's own anatomy using VR prior to surgery

- Seeing through the skin to illuminate veins for blood draws

- Using decision support technologies

- Using artificial intelligence (AI) for making decisions and interpreting diagnostic tests, analyses, and diagnoses

- Planning treatments

- Integrating smart devices

- Distilling massive amounts of data and research into meaningful doses

- Prescribing

- Assigning and revising adaptive learning modules based on the individual's learning cues

- Connecting through global telehealth

- Using robots

- Providing VR distraction for painful procedures

Informatics will continue to support the need to apply the information and technology garnered from experience to advancing developments. As technology continues to evolve, nurses will see different uses for that technology and its integration into mainstream health care and the educational arena. Informatics, which shapes the ubiquitous integration of technology, will continue to transform and alter nurses' reality.

Nursing Informatics and the Realities

Nursing informatics has enhanced the current reality of providing direct patient care while beginning to transect the realities of life given the current technological capabilities. From implementing voice recognition to improving the ability to interface with devices to entering total VR environments, technology continues to affect the nursing reality. In the healthcare arena, technology continues to push the envelope and challenge paradigms and abilities. Those who adopt and adapt the latest technologies available will continue to transform health care and provide patients with the most advanced care techniques.

The current technological changes pose tremendous challenges to and place great expectations on those educating the future healthcare workforce. Faculties must be early adopters and keep their students abreast of the technological advances made in health care and other sectors that will affect patient care. Future care providers, researchers, administrators, informaticists, and educators must be able to frame the use of technology. Practitioners must constantly strive to stay current with an eye to the future so that they are implementing the best technologies to improve patient care. Lifelong learning must not only be a search for knowledge but also a way to stay informed about new technologies and learn how to implement them. Going one step further, practitioners must use their new knowledge to better understand the technologies and, through their ideas, extend the capabilities of the technologies.

Nursing informaticists are engaged in evaluating and choosing technologies. They are responsible for tailoring functionality and establishing end user conditions and requirements. In addition, they are relied on to deploy the technologies and prepare users by designing and delivering the necessary training. As nursing informatics informs and expands nurses' knowledge and technology use, their reality is being affected as well as the reality of the healthcare industry and that of patients.

Realities

The current definition of reality is crucial to understanding the new realities and how they are transforming people's personal and professional lives. We will explore various realities to contemplate and critically think about in relation to your current reality and foreseeable realities while imagining your future realities. However, we must remember that each new invention, innovation, novelty, and advancement the future brings will cast everything we describe in a different light. Every newly expressed idea, concept, or product will continue to shape our reality.

The realities we will review are those that most people refer to, such as AR, mixed reality (MR), extended reality (XR), and VR. However, our commentary will go beyond the obvious to evolving developments that continue to have a profound influence on our realities, such as telemedicine; big data; and the Internet of Things (IoT), Intelligence of Things (IoT), and Artificial Intelligence of Things (AIoT), including AI, robotics, smart devices, and haptics. These select technological enhancements are affecting our professional reality and have changed and will forever change the way we view the world of nursing and patient care.

Augmented Reality

Augmented reality (AR) is an interactive experience within a real-world environment that enhances real-world objects using virtually simulated or computer-generated perceptual information. It provides stimulation or input for multiple senses: olfactory; visual; auditory; haptic; and somatosensory, such as pressure, pain, and warmth. AR comprises apps; wearable technologies, such as glasses; and software that not only interacts with its users but also enhances their senses with data, information, audio, and images. The AR overlays are added to the users' existing environment or reality to enhance their virtual experiences.

AR innovations provide instantaneous access to patient data and information, which helps healthcare providers diagnose, create a plan of care, and implement the treatment regimen for their patients. As AR holograms infiltrate our reality, we can explore and manipulate these holograms with colleagues, such as in a learning space. AR holograms could be used to orient nurses and nursing students to a new work or clinical setting, such as a hospital. This orientation could be interactive and engaging by providing content that requires the active involvement of the learner as it teaches about equipment, patient rooms, and the physical layout of the healthcare setting. AR can overlay a patient in an exam room so that students can practice their patient engagement and interviewing skills. It can provide an anatomy or physiology overlay to explore and learn from as it brings objects to life right in front of students. AR can create a shared view, with everyone interacting with the same object at the same time. Even though a group may have a shared view, or AR object, are its members' realities the same?

Mixed Reality

Mixed reality (MR) is the blending of the physical world with the virtual world to create new environments and visualizations along the continuum from AR to VR. As students move from textbooks and two-dimensional images to the realities, they can now view anatomy from the inside out and explore it in three dimensions. Using MR can decrease and even eliminate the use of cadavers. Nurses can practice complex procedures before they implement them with their patients. Has this already become the reality for some people in health care?

Extended Reality

Extended reality (XR) is an umbrella term where the X in XR can represent any letter so that the term covers all the forms of digitally altered realities that currently exist (i.e., AR, MR, and VR) and other immersive technologies yet to be developed. With XR, users can experience augmentation through total immersion.

Virtual Reality

Virtual reality (VR) simulates reality in a digital medium. VR has been used in health care for about 20 years. Schools of nursing are exploring and implementing VR in the education of the future nursing workforce. VR learning episodes provide opportunities for nursing students to practice skills and interviewing techniques in a safe environment and experience intimate and challenging conversations, such as discussing death and dying with their patients while the faculty member assesses their ability to empathize with and support the patients. VR can be used to allow nursing students to become patients and experience the patients' reality based on their health status. The patients could have visual impairments, schizophrenia, Alzheimer's disease, or other conditions that become the students' reality. As the students cannot see well or slur their words or experience hearing voices, they become immersed in the reality of the patients they will be caring for. How does this VR immersion affect the students' reality when they interact with the actual patients? VR can be used to provide nursing students with the experience of patient care while interacting as a member of the healthcare team. Think of a nursing skill and the reality of the experience in VR versus the reality of performing the skill while providing actual nursing care. Advances in VR technology have been made that have enhanced medicine, such as surgeons' using the patients' own anatomy to practice a VR surgery prior to the actual operation. In this case, how is the surgeons' reality affected, and does the surgeons' VR reality affect their physical reality when they are performing the actual operation?

| Box 25-1 A Glimpse of Patient Experiences in Future Health Care |

|---|

| As we go bounding into the next decade, we must consider human-technology interfacing as a confluence matrix where the human and the machine converge to create new realities. The humans are creating the software and machines necessary to enhance human capabilities. What does it mean to be human, and how are these lines blurring as our machines become intelligent influencers in this tenuous balancing act of a relationship? Because we are human, we tend to include the attributes of our humanness as we develop technologies. When technologies replicate, do they create images of themselves, and can they include human qualities? Must a machine behave like a human for us to accept it? How do we technologically enhance our bodies and minds without accepting the human-machine or human-technology integration? Is the machine or technology humanoid (i.e., appearance or character resembling a human)? Based on the number of enhancements, is there a point where we consider ourselves less human and more technology or machine? In the Six Million Dollar Man (a TV series that aired from 1973 to 1978), a man suffered severe damage during an accident and became the “bionic man.” This bionic man was created by the melding of machinery and software with human tissues while maintaining his intellectual capabilities and humanness, or the quality of being human (i.e., human being or a person). As technology invades our culture and healthcare delivery system, what are the implications for future healthcare workers dealing with enhanced human patients or enhanced treatment modalities based on technological advancements?

What can we expect as our healthcare reality in the 2030s? It is difficult to answer this question given the rapid technological advances we are experiencing daily. We will present our interpretation of what the advancements will bring to health care in the next decade. In business, there are BHAGs, or big hairy audacious goals. For informatics, we provide our BHAG, or big hairy audacious glimpse, into the future.

|

| Teenager: 3D Printing and Prostheses |

|---|

| Mari Jaynea is a 17-year-old female who lost her hand in an auto accident. She wears a prosthesis that allows her to hold or grasp items, but because she is an active teenager, she frequently breaks her “fake hand,” as she puts it. Her parents purchased a 3D printing device that can make her prosthetic hand for less than $80 and print it out in layers in about 5 hours. Once the prosthetic hand has been assembled, Mari Jaynea and her mom test it to make sure that it fits well and that there are no areas of irritation. Now Mari Jaynea can have a new prosthetic hand quickly and more cost effectively compared to scheduling an appointment at the clinic and waiting for technicians to create it.

Mari Jaynea and her mom have joined the e-NABLE Community (http://enablingthefuture.org), which spans 100 countries. They now create prosthetic hands for two children in need who are within one hour's drive of their home. Mari Jaynea wants to reach out and find additional children her family can help. Her mom says that when she hears the printer turn on, she gets ready to assemble because she knows that Mari Jaynea or one of these other children needs a new prosthetic hand. Mari Jaynea has also joined an international innovative team created for the purpose of enhancing the printable prostheses. She will be testing new ideas, from printing and assembly to fitting and use. Mari Jaynea and her mother are excited to explore how they can improve Mari Jaynea's capabilities, comfort, and durability.

|

| An Elderly Woman, a Robot*, and a Smart Home |

|---|

| Amy is a 75-year-old female whose family is concerned about her staying in her own home alone. Based on her “forgetfulness,” as described by the family, she can no longer drive because she went to the grocery store and forgot where her car was parked and then could not remember how to get home. They purchased a self-driving car for her that has all of her favorite spots, such as her church, grocery store, women's club, and YMCA, in its navigational system. It is also programmed for her nurse practitioner, hospital, hospitalist, and imaging and diagnostic centers. This vehicle is voice activated and equipped with emergency information for police, fire, and ambulance. Amy has a radio-frequency identification (RFID) chip in her arm that is recognized by the car and her virtual assistive service robot. This robot has a well-developed sense of smell and borders on instinct in its ability to detect issues in its human counterpart, in this case, Amy. Therefore, it easily replaces a service animal for the elderly or for those who do not or cannot take on the responsibility of animal care but who need the service. When Amy was introduced to her robot, it reminded her of a “Brad,” so that is what she named it.

When Amy enters the garage, Brad accompanies her into the car. When Amy says “clothes,” the car takes her to the clothing store. She will not order her clothes online because she has always shopped for them in person. She is appreciative that the clothing store has remained. Brad accompanies her into the store and carries everything she purchases, and everything is charged as they walk through the checkout station in the store. Brad contacts the vehicle to pick them up, and the car moves to the exit. When it arrives and is within 3 feet of them, it opens the doors and trunk. Brad makes sure that Amy gets in the car and then stows the purchases in the trunk and enters the car. Amy hesitates, and the car asks her if she is ready to go home. Amy says yes, and they return home.

Brad reminds Amy that it is time to monitor her heart, eat lunch, and take her medication. Brad has Amy go to the bathroom and then relax. It then proceeds to monitor her blood pressure and heart rate and checks all of her peripheral pulses; it determines that everything is normal and that this information does not need to be sent to her nurse practitioner. After lunch, Amy reads for a half hour and then Brad guides her through her exercises. Amy had a knee replacement and was rehabilitated using the orthopedic surgeon's treatment plan that Brad implemented. She is now on an exercise regimen that Brad initiates and monitors.

Amy told her daughter that Brad tells her the day, date, and news every morning and then lists the activities they have scheduled; she sees the nurse practitioner tomorrow. She states that she does not remember why they are going to see the nurse practitioner tomorrow and asks Brad to explain. Brad states that they had a telehealth appointment last month and the nurse practitioner requests a face-to-face meeting when necessary and at least once every 2 months. Amy says that she remembers that but asks why she has to go. Brad explains to Amy and her daughter Amy's medical condition and the results from this past week's monitoring and compares these results with the findings from the past year to show patterns and trends in Amy's health status. Brad explains Amy's medication changes and most recent health status, within the past month.

Amy wants to go for a walk with her daughter and asks Brad to stay home while they go. Brad scans her RFID chip and activates her wearable monitoring system to receive feedback about Amy on her walk so that it can intercede if necessary. Amy's daughter thanks Brad and says to Amy that she is well cared for by her virtual assistive service robot. Brad interfaces with all the smart home internet-connected devices while Amy is out of the house. Brad contemplates what is on the agenda for dinner today after checking in with the smart refrigerator. Brad monitors all of Amy's smart home devices to check their status. These are the smart devices currently in Amy's home:

|

- A stove that turns off if she leaves the kitchen and it is unattended

- A refrigerator that can create shopping lists, track expiration dates, and even create recipes based on the ingredients on hand. This information is transferred to Brad, which monitors grocery needs and meals in relation to Amy's nutritional requirements and meal and snack preferences.

- A slow cooker

- A coffee maker

- A washer, dryer, and iron

- All the locks on the exterior doors

- A doorbell with full audio and video capabilities

- The thermostat

- All lighting throughout the home that sets the ambient lighting based on the detection of Amy's presence in each room

- All outlets throughout the house

- All blinds, shades, and curtains throughout the house, which respond to Amy's settings based on the time of day and her presence in the room or location

- TVs that are voice controlled by Amy and Brad

- The garage door opener

- All security cameras, with audio- and video-capturing capabilities

- A commode that opens and lights up when Amy approaches

- Monitors that sense electric surges and turn off appliances, or that sense water issues, such as freezing pipes, and turn off the water to avoid a ruptured pipe and flooding in the house

Once Brad has checked the house, which it does twice a day, and scanned Amy's monitors to make sure she is fine, it receives some downtime while waiting for Amy to return from her walk. Brad does not get much downtime, though, because Amy pings it to tell it to send the car because she is feeling tired. Brad provides her coordinates to the car and climbs in to get on its way to her while remotely monitoring her vital signs. Brad helps her into the car and scans her appearance while monitoring her vital signs again. Amy tells Brad she's just tired and to get her home. Her daughter gets in the car and off they go. Amy walks into her living room and the lights turn on; then she asks the TV to tune to her favorite channel and sits in her recliner. The recliner moves to her programmed setting and elevates her legs. Brad asks her how she is feeling while monitoring her vital signs. Her vital signs remain normal, and Brad records the experience for their appointment with the nurse practitioner. Amy falls asleep, and Brad walks her daughter to her car. Brad enters the house, sets the doors to lock, and monitors Amy while she sleeps.

*Reflecting on future care robots, such as Amy's virtual assistive service robot, Brad, does the robot's reality matter? What reality is programmed into the robot? If the robot is enhanced with AI, does it learn on its own and create its own reality? |

Telemedicine

Telemedicine received a boost with the COVID-19 pandemic, which found healthcare providers scrambling to provide safe access to health care while maintaining quality and care continuity. Telemedicine necessitated regulatory changes that affected healthcare billing, and patients experienced a new reality using this healthcare access pathway. There are long-term implications for informatics, hospitals, providers, patients, and health technologies. Many view this reactive surge of telemedicine as the path forward, and it represents a paradigm shift in how care will be delivered beyond the 2020 COVID-19 pandemic. Reflect on these changes and the new realities generated from this evolution.

Big Data

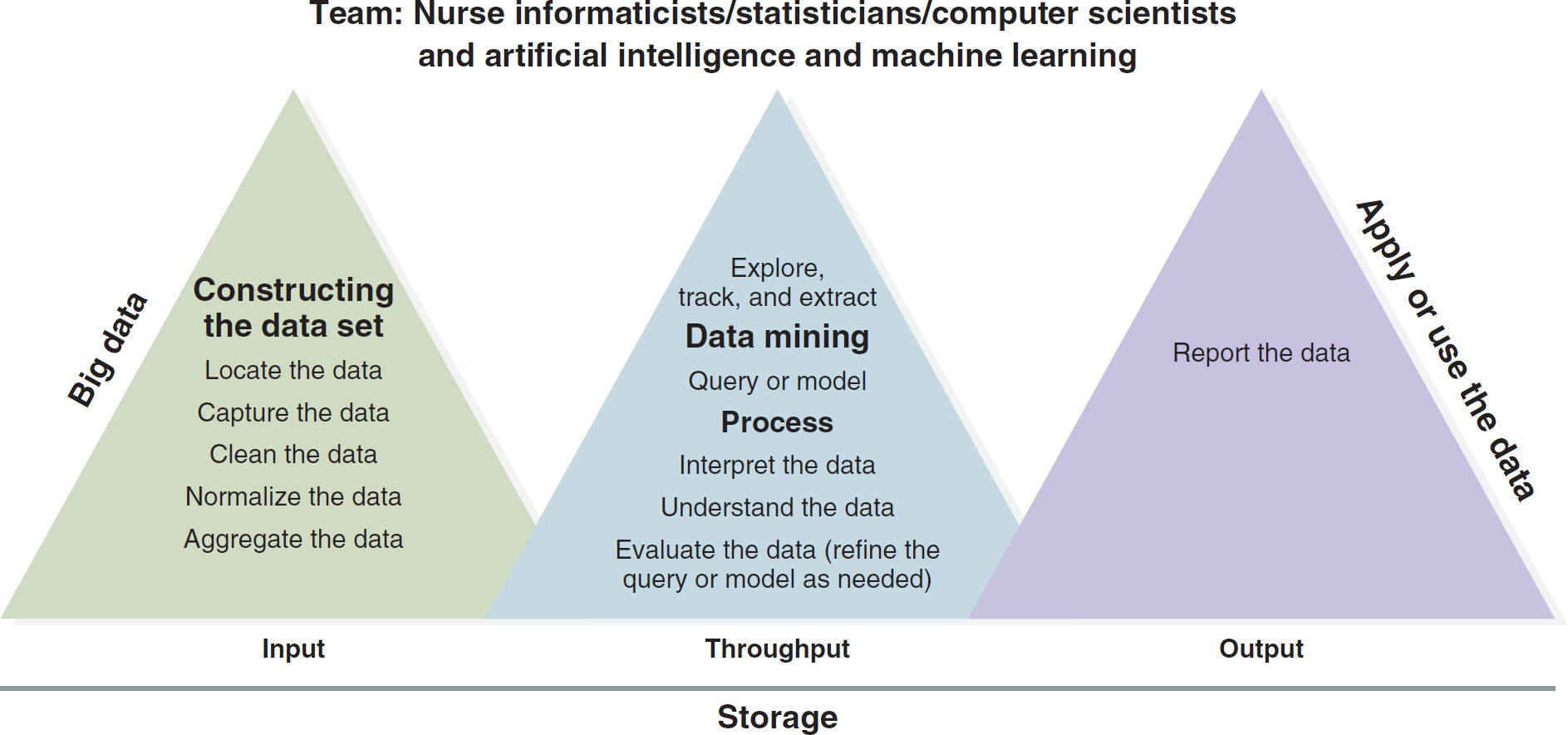

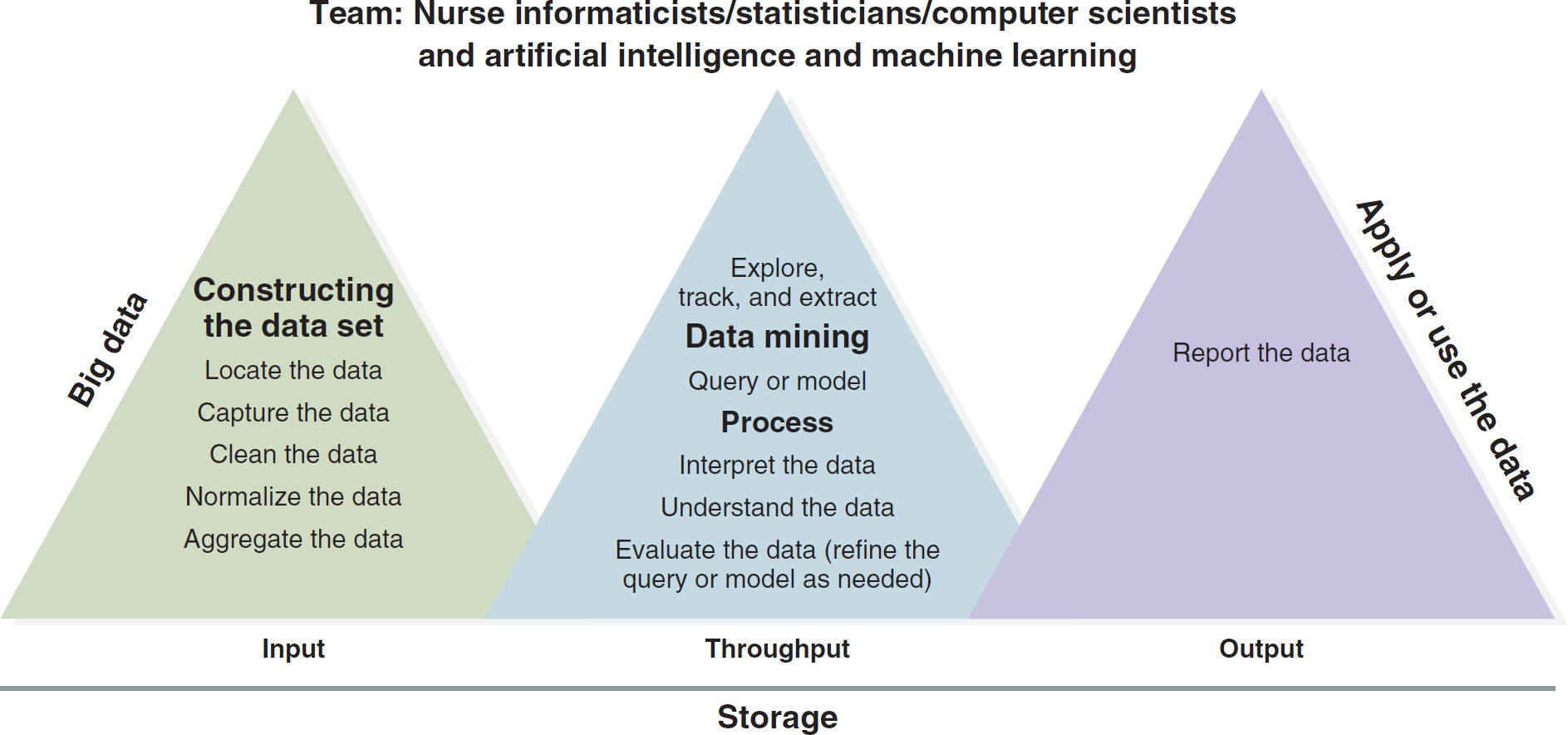

Big data is mined, analyzed, and synthesized by informaticists who advance big data science. The informaticists must have access to data sets that are significant and relevant in order to mine them. Data mining occurs during the throughput in the middle of the data processing cycle. The Big Health Data Life Cycle, which is depicted in Figure 25-1, shows the path the data travel and how they are processed or transformed, from locating and capturing the data to interpreting and understanding the data to the reporting and ultimate use or application they inform.

Figure 25-1 Big Health Data Life Cycle

A sequence of three interconnected triangles depicts the collaborative health data life cycle team, comprised of nurse informaticists, statisticians, computer scientists, and experts in artificial intelligence and machine learning.

The trio of triangles, symbolizing input, throughput, and output, collectively represents the concept of storage. Each triangle indicates the following:

1. Input: Ingesting big data involves constructing the dataset through the following steps: locating the data, capturing the data, cleaning the data, normalizing the data, and aggregating the data.

2. Throughput: Explore, track, and extract data through processes such as data mining, which includes querying or modeling. Further processing involves interpreting the data, understanding the data, and evaluating the data, that is, refining the query or model as needed.

3. Output: Apply or use the data and report the output data.
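To make the input, throughput, and output stages more concrete, here is a minimal Python sketch of a toy pipeline. Everything in it is an assumption made for illustration: the patient identifiers, the blood pressure readings, the 140 mmHg threshold, and the function names `ingest`, `mine`, and `report` do not come from the figure. The sketch covers only cleaning and aggregating on the input side and omits locating, capturing, and normalizing.

```python
from statistics import mean

# Hypothetical raw readings: (patient_id, systolic blood pressure in mmHg, or None if missing)
RAW_READINGS = [
    ("p1", 128), ("p1", None), ("p1", 134),
    ("p2", 151), ("p2", 149),
    ("p3", 118), ("p3", 122),
]


def ingest(raw):
    """Input: clean the data (drop missing values) and aggregate readings by patient."""
    dataset = {}
    for patient_id, value in raw:
        if value is None:
            continue  # cleaning step: skip missing readings
        dataset.setdefault(patient_id, []).append(value)
    return dataset


def mine(dataset, threshold=140):
    """Throughput: query for patients whose mean reading exceeds an illustrative threshold."""
    averages = {pid: mean(values) for pid, values in dataset.items()}
    return {pid: avg for pid, avg in averages.items() if avg > threshold}


def report(findings):
    """Output: turn the findings into lines a clinician could review."""
    return [f"{pid}: mean systolic {avg:.0f} mmHg" for pid, avg in sorted(findings.items())]


for line in report(mine(ingest(RAW_READINGS))):
    print(line)
```

In practice, the evaluation step would feed back into the query, for example by adjusting the threshold or the model and running the throughput stage again.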

The nurse informaticist works with and often leads a team that typically consists of statisticians and computer scientists. Their ability to track and extract data and information in meaningful ways advances nursing knowledge and, when coupled with innovative, evolving technologies such as AI and machine learning, will have a significant effect on nurses' practice and the nursing profession. Harnessing the gems gleaned from big data with cutting-edge technologies, models, and theories results in new knowledge and improved patient care; big data is informing and sculpting healthcare delivery. Patterns and trends can be identified in the data, patient outcomes compared to those expected, and predictive analytics and forecasting explored. As our skills and technologies advance, what we can gather from big data will continue to expand and inform us. Describe how big data changes our reality and that of our patients.

Internet of Things (IoT), Intelligence of Things (IoT), and Artificial Intelligence of Things (AIoT)

Technological advancements in science, telecommunications, robotics, and informatics capabilities in relation to technologies and big data continue to transform health care. The latest trail that is being blazed in the healthcare delivery system is from state-of-the-art, up-to-the-minute devices and the high-tech communication systems interfacing with and integrating them.

We know that the Internet of Things and AI are each self-sufficient in their own right and are potent technologies. The Internet of Things connects devices to the internet and to other interconnected devices that collect useful data and information. AI uses machine learning and intelligence to infer things and learn from its interfacing and interactions. AIoT is the blending of AI and the infrastructure of the Internet of Things to realize efficiencies and enhance human-technology interfacing and interactions. Through this powerful infusion, data management and analytics are improved. AIoT processes and transforms the data from the Internet of Things into meaningful information to inform decision-making. AIoT will be leading the way to empower the billions of smart devices and meet mainstream needs, including smart health care, smart monitoring, smart homes, smart self-driving vehicles, and other smart technologies that will contribute to society's health and lifestyles. AIoT will also assist with analyzing the data being processed. Our reality is changing because of the Intelligence of Things and AIoT, or applying AI to the Internet of Things, such as smart devices, monitors, and sensors; they not only communicate with us but also can interact with each other and even initiate actions based on data and information they collect from the devices in their Internet of Things, or interconnected network.

These devices can be proactive for our patients, which changes our reality. Typically, we react to patients who present with symptomology, whereas monitoring devices can be proactive when they detect subtle changes in the patient's condition, such as alerting patients to increase their intake of sugar or reaching out to the patient's healthcare providers in real time. Think of our reality in relation to information overload with the amount of data and information generated by humans now being augmented by AI-generated data and information. The AIoT infrastructure will allow us to realize efficiencies, enhance human-technology interfacing and interactions, and improve data and information management and analytics. We will consider AI, robotics, and smart devices because these advances are changing our world and our reality forever.
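The difference between reacting to symptoms and acting proactively on subtle changes can be sketched in a few lines of Python. This is a hypothetical, rule-based example only: the thresholds, the `triage` function, and the action names are assumptions chosen for illustration, not clinical guidance and not the logic of any real device.

```python
# Illustrative thresholds in mg/dL; assumptions for this sketch, not clinical guidance.
LOW_GLUCOSE = 70      # below this, prompt the patient to take in sugar
URGENT_LOW = 54       # below this, reach out to the healthcare provider
RAPID_DROP = 30       # a fall this large across three readings triggers an early alert


def triage(readings):
    """Choose an action from glucose readings ordered oldest to newest."""
    latest = readings[-1]
    if latest < URGENT_LOW:
        return "notify_provider"
    if latest < LOW_GLUCOSE:
        return "alert_patient"
    # Proactive step: the value is still in range, but it is falling quickly.
    if len(readings) >= 3 and readings[-3] - latest >= RAPID_DROP:
        return "alert_patient"
    return "no_action"


print(triage([110, 105, 100]))  # stable and in range
print(triage([130, 110, 95]))   # in range but dropping fast
print(triage([70, 60, 50]))     # urgent low
```

The third rule is the proactive one: it fires before the patient would present with symptoms, which is the shift in reality the paragraph above describes.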

Artificial Intelligence (AI)

Artificial intelligence (AI) is a disruptive innovation that has exploded onto the scene (refer to Chapter 19, Nursing Informatics and Nursing Education, to learn more about AI's educational effect). AI-enhanced devices and agents are ushering in a new era of health care that will radically inform and enrich patient care and improve patient outcomes. AI's effect is far-reaching and ranges from using AI algorithms to monitor, diagnose, and treat a diabetic's fluctuations in blood glucose levels with insulin to providing real-time assistance for healthcare providers with clinical interpretation. The potential of AI in health care is being realized in its ability to interpret and diagnose. Radiologists' work will be greatly enhanced because AI can more efficiently and accurately interpret scans and X-rays than radiologists can. AI has been able to diagnose skin and breast cancers more accurately. AI and machine learning can extend the healthcare workforce. Human intelligence (HI) will coexist and fuse with AI as both intelligences work together to overcome the other's weaknesses while enhancing strengths. Reflect on how you could work with AI agents in your nursing role. How will this synergy enhance patient care and your role? How are patients' realities changed when they are being treated by machines and not people, as in the case of patients with diabetes whose blood glucose is being monitored and assessed and whose treatment is initiated by their AI agent? Healthcare providers who are struggling with patients' cases can seek assistance with interpreting the patients' data and diagnosing them. How can we illustrate an AI-enhanced reality?

| Box 25-2 Cloud-Based Ecosystem |

|---|

| Meet Shandie, a woman with four children, a busy career, and an active lifestyle. She developed gestational diabetes during her pregnancy with her third child, but it did not resolve after the child was born and is worsening and difficult to manage.

She works with Kevin, her nurse practitioner, to monitor her diabetes, but they have not been able to manage it given her family, career, and active lifestyle. Kevin tells Shandie that he has been trying to keep up with the medical data, which are constantly being released, and often consults with her endocrinologist, but they cannot keep up. He asks her whether she has purchased the smartwatch, which he prescribed, to monitor her lifestyle, blood glucose, and insulin needs. Shandie says yes and that she just sent information to him and her endocrinologist while she was waiting to see him. He praises her for being able to do that and states that it will help add vital data and information to assist them to help her. Kevin reviews the app that she is using and informs her that it is an AI healthcare cloud app; this tool will allow her smartwatch to provide them with data and information so that they can review all of her healthcare encounters and the personal data that she inputs. Kevin stresses that this will provide meaningful insights for him and her over time so that they can best manage her diabetes and address issues as they come up in real time with the built-in glucose monitoring.

Because the AI app functions in a cloud ecosystem, the environment is multifaceted and brings in a wide range of data, information, and knowledge from doctors, nurses, nurse practitioners, researchers, significant others (e.g., those people she trusts to answer questions and help monitor some of the app's output), insurers, pharmaceutical companies, and support groups. As the AI cloud apps share de-identified data and receive others' de-identified data to analyze, the AI learns and shares that output with everyone accessing that app. This information helps Kevin and Shandie compare her diagnosis, treatment, and situational context with others. Blending powerful analytics and insights, the AI app is able to make the best-informed recommendations for Shandie's care; as new studies and information or knowledge emerge, they are analyzed and synthesized into the app's AI, meaning that the AI is learning, expanding its capabilities, and sharing the output.

As this app interacts with an individual's data set, such as Shandie's, it can continue to learn about the situational context affecting Shandie's care and how treatment regimens have affected her health and ability to maintain her chosen lifestyle. As the app matures and other apps are created in the system, the data, information, and knowledge available continue to increase and are processed by the AI, which is what it does best, while clinician oversight leverages what the human clinician does best: synthesizing the AI's findings and recommendations with Shandie's needs and situational context. As apps evolve and enter the ecosystem, how will our patient care reality change?

|

| AI-Powered Health |

|---|

| Docus (2023) is an AI-powered health platform where you can “[t]alk to AI Health Assistant, generate your health report, validate it with a Top Doctor from the US & Europe” (para. 1). As more and more of these platforms become available to our patients, we must consider how much specific information they are able to access. It is one thing to seek information on the web about your condition or about general symptoms, but now patients can receive specific information about their contextual situation and about their own medical history, symptoms, and other factors that are relevant to them. In addition, the patient can choose to receive a second opinion from doctors from leading institutions from around the world. It is our responsibility to explore these platforms and help our patients navigate this new individualized information-seeking option. |

| AI Gives Paralyzed Woman Her Voice |

|---|

| A “[b]reakthrough brain implant and digital avatar allow stroke survivor to speak with facial expressions for first time in 18 years” (Marks & Kurtzman, 2023, para. 1). This patient is working with pioneers exploring AI algorithms to recognize unique brain signals for speech. This is such a positive reality changer for patients who feel trapped without the ability to communicate. The patient featured in this article described it as “[l]ocked-in syndrome” where “you are locked inside a body where no muscles work” (para. 9). This exciting research uses an avatar to add a face and personalizes the patient's voice based on a recording she made prior to her injury. AI-enabled communications for stroke patients and others who cannot communicate will be extremely important evolutions to follow.

|

Robots

Robots are automated and/or smart machines that are programmed to complete the routine tasks typically done by a person. Robots are permeating our healthcare delivery system. They assist with surgery; care for patients in place of nurses; and assume recurring tasks for nurses and doctors, such as getting supplies, to increase the time doctors and nurses can spend with their patients. They can assist in the pharmacy and deliver drugs and help the aged live more independently, as in the example of Brad helping Amy. Brad and robots that resemble pets can help with the psychosocial interaction lonely people need without the maintenance requirements of a pet or the risks of taking in a roommate. Brad helped prepare meals and monitored Amy's medications. What reality is programmed into the robot? If it is enhanced with AI, does it create its own reality? Do you foresee robots replacing nurses or augmenting and supplementing care in a future reality?

| Box 25-3 How Will Patients Respond to Care Bots and Cyborgs? |

|---|

| Chondon, a patient, enters the clinic, and a voice says, “Welcome to the Natural Coastal Waters Clinic. Please take a seat, and a care bot, NCW1, which is a robot that can assist with patient care, will be assigned to you during your visit today.” Once seated, Chondon immediately sees NCW1 approaching him. It introduces itself as NCW1, the care bot that will be caring for him today, and asks him to accompany it to an exam room. On the way, NCW1 informs Chondon of his weight and height. They then enter exam room NCW1, the number of which corresponds to the care bot assigned to that room. NCW1 closes the door and turns to face Chondon; its chest opens, and a screen appears.

It says, “Welcome to our Natural Coastal Waters Clinic. To get started, please place the palm of your hand on the middle of the screen by placing it within the outline of the hand. Next, look directly into the soft blue light on the screen above your hand. I have your name as Chondon Smatty. Is it OK if I call you by your first name, Chondon?” Chondon says yes and adds that what the robot just did is amazing. NCW1 says, “Chondon, we now have a retinal capture. Your weight and height have not changed since your last visit, and I have obtained a set of your vital signs, which have already been transferred to your electronic health record and your smartwatch. All your vital signs are within normal limits. I have also assessed your blood glucose level, and it is elevated. The top of the normal range is 120, and your level is 140. I see that you are here to consult with Nurse Practitioner Kavan. Do you have any questions at this time?”

Chondon says, “Since when have robots been used here at the clinic?” NCW1 messages Kavan that Chondon is in his exam room while answering Chondon.

“We were placed into service just last week. Our purpose is to help the patients and nursing staff. How do you feel about the care I have provided thus far?”

Chondon says, “I'm not sure; it's different interacting with a robot.”

NCW1 corrects him by saying, “Care bot.” Chondon smiles. Kavan enters the room to find Chondon and NCW1 chuckling together.

Kavan asks what he missed, and Chondon says, “I called it a robot, and it corrected me that it is a care bot. It's weird interacting with it.”

This is when nurses must support their patients because the experience is different from what patients are used to when they come in for a visit. As a member of the nursing staff in this clinic, how would your and the patient's reality change?

Now fast-forward into the future. Chondon goes to the Natural Coastal Waters Clinic to meet with his cyborg nurse, CEN 1 (Figure 25-2). Chondon says, “You look human!” to which CEN 1 replies, “I am a bionic human, a cyborg-enhanced nurse, or CEN, and I am the first one here at the clinic, hence my name CEN 1.” Chondon asks what a cyborg is, and CEN 1 responds, “It is a shortened term for a cybernetic organism, cyborg. I have both biological (human) parts and technological or artificial parts that are electronic, mechanical, or robotic.”

Figure 25-2 CEN-1

A three-dimensional depiction of a humanoid robot.

Chondon interrupts CEN 1: “Why did you choose to do this?”

CEN 1 smiles and answers, “It was not by choice; I was a nurse who was in a terrible accident that left me without my right eye, left arm, and left leg. The doctors discussed my options of just having prostheses or having new limbs that I could fully control. I jumped at the chance to have control and not have to remove and attach body parts. That is why you see the metal skin where my arm and leg are connected. They also had a smart eye that they could implant. My face has the partial metal skin covering on my upper right cheek where there was too much tissue damage, and I chose not to have multiple surgeries. My face looks the same other than the black steel covering by my right eye. I have some mechanical organs and an artificially intelligent pancreas.”

Chondon asks CEN 1 what its name was before the accident. CEN 1 states that “once my appearance was changed and I was listed as a cyborg, I wanted people to know I was a cyborg nurse, so my name is CEN 1; I feel as though I was given a new life after a devastating accident. I am thankful that we have the technology and science to do this. I can still practice as a nurse providing direct care to my patients. I know I confuse humans and care bots alike.” CEN 1 laughs.

“There are other enhancements that I can share with you, but let's discuss why you are here and review your data strip.” Chondon says, “OK, but I'll have more questions.”

As a member of the nursing staff in this clinic, how would your and the patient's reality change?

|

Smart Devices

Smart devices (we would prefer another term, but “smart” is what is recognized in this reality) continue to invade our reality. If you have a smartphone or a smartwatch, how much do you rely on its capabilities to help organize your life? How much do smart devices communicate and share data with each other? Do you know how many steps you have taken today? Do you monitor your sleep habits, vital signs, and moods? Think back and reflect on your reality before you had these devices. In what ways did your reality change once you began using each device? Could you give up your smart gadgets? Our patients also use smartwatches. We can capture data from our patients' wearable devices, such as smartwatches. The data we collect and analyze from smart devices permit us to predict health problems and personalize treatment to support personalized medicine. We can treat patients before they even experience any symptomology. Consider all the smart devices you can describe and relate the types of data they can transmit to the healthcare provider. If we treat someone by providing them with access to an app, how does this reality affect our practice and change our and the patient's reality?

Haptic Technology: Reach Out and Touch Someone-Evaluating the Viability of Haptics

Haptics, the science of applying tactile sensation or touch to human-computer interactions, are technologies that can replicate and transmit the real-life sensations of touch in the virtual world using, for example, proprioception (body position and movement), force feedback, vibration, touch, temperature, and grasp.

Haptics work in much the same way as graphics and audio to shape our reality in the virtual world. Our somatosensory system is stimulated by haptics, which pass on context and other information to our brains. We are all familiar with haptics found in our phones and other electronic devices. The vibrate feature on phones uses haptics to provide a tactile response, letting us know we have an incoming call or a text without disrupting the people around us. Telehealth employs haptics because touch is an absolute necessity during patient assessment and diagnosis.

The future of haptics in nursing informatics looks bright not just for learners but also for patients. Haptics can simulate the resistance of the skin, blood vessels, and muscle tissue for giving injections, which means the more the learner practices, the more muscle memory the learner gains, resulting in better technique and confidence with much less discomfort and pain for patients. Haptics help assess edema. Strength tests, such as squeezing, can also be accomplished through haptics. What will haptics enable learners to do in 2030?

Transcending Reality-Exploring the Promise of the Metaverse

The term metaverse has been defined in different ways based on the definer's perspective. McGonigle and McGonigle (2023) stated that the metaverse is a concept and defined it as

the evolution of the latest frontier of the Internet that is a continuously accessible and available trisecting AI, the realities (MR: AR through VR), and our psychosocioeconomic digital and physical presence. The metaverse promises hyperconnected blurred or blended reality experienced through seamless immersive interactive exchanges on the devices we use from laptops to tablets to smartphones. (para. 7)

In simple terms, the metaverse is a virtual or digital place where users and their family and friends can learn, work, play, shop, and remain connected no matter where they are physically.

The promise of the metaverse as it is conceptualized is to provide the digital space needed to conduct our lives; it is a virtual or digital place where users and their family and friends can remain connected no matter where they are physically to participate in education, business, work/career, entertainment, socialization, mental and physical health, and wealth perspectives and events. This promise must be accomplished with a strong feeling of presence and being interconnected in a welcoming space where each user can control their own access and experiences. Users and businesses will be able to take their identities, their assets (including monetary assets, clothing, jewelry, and furniture), and their experiences wherever they want, and the digital spaces will persist or remain even when there is no one in them.

Refer to Box 25-4. What do you think of ChatGPT's description of the metaverse to a nursing student?

| Box 25-4 AI ChatGPT Describes the Concept of the Metaverse to a Nursing Student |

|---|

| Dr. McGonigle asked ChatGPT, “How would you describe the concept of the metaverse to a nursing student?”

ChatGPT responded, “The concept of the metaverse can be described as a virtual space or world where people can interact with each other in a digital environment. It is a fully immersive, interactive, and interconnected virtual world where individuals can engage in activities, socialize, and even conduct business. It is essentially an online extension of the physical world where people can create and customize their own avatars and explore a vast and diverse digital landscape.

“For a nursing student, the metaverse has the potential to be used as a tool for healthcare education, research, and even patient care. In the metaverse, nursing students can participate in virtual simulations to gain hands-on experience with complex medical procedures, observe clinical scenarios, and learn from healthcare professionals in a safe and controlled environment. It can also be used as a platform for telemedicine, providing access to healthcare services to people in remote or underserved areas. Overall, the metaverse presents an exciting opportunity for the nursing profession to innovate and improve patient care through technology.”

|

The Four Foundational Pillars of the Metaverse

To understand the metaverse, one must begin with the four foundational pillars of the metaverse: virtual environment, user experience, economy, and technology. Each pillar represents the fundamental elements or building blocks that make up the metaverse. These pillars are the key components that enable the creation and operation of the metaverse, and they play a critical role in shaping the overall user experience and moving us toward realizing the promise of the metaverse.

The first foundational pillar is the virtual environment that encompasses virtual real estate, infrastructure and connectivity, interoperability, continuity, persistence, and content creation and distribution. Everything has to do with the virtual space that supports open experiences. These environments can range from fully immersive 3D worlds to simple chat rooms.

Pillar 2, the user experience in the metaverse, is highly immersive and interactive and offers users a seamless and interconnected virtual world. Users can impact and be impacted by the metaverse. The user experience is engaging, encompassing avatar and object personalization, social and community presence and belonging, education or learning, health, wellness, and work experiences. Users can interact with a vast array of digital or virtual content as well as other avatars. AI will replace human-controlled actions and interactions with computer-controlled automated actions and interactions. AI avatars can learn gestures, body language, and language-specific conversational exchanges. AI avatars and objects can inform and assist people so that they can complete their tasks in the metaverse. Content is the crucial component of the metaverse, providing users with immersive and engaging experiences that can be user generated. Content is important since it “contains story reality, immersive experience, and conceptual completeness” (Ziaul, 2022, para. 19). The content is what the users want to experience and the reason they enter and interact within the metaverse. As users generate the metaverse spaces, such as buildings, campuses, cities, houses, hospitals, offices, meeting spaces, clinics, streets, museums, or other assets, they choose the look and feel of the areas, how they are organized, and what services will be supplied. As people create their avatars with the look and characteristics they want for their avatar's appearance to represent them, the diversity of perspectives and culture will shape the community of the metaverse. Users or people as avatars are the subjects and the realities created within the metaverse and are the means for linking and connecting the avatars in the metaverse and the users in the real physical world. 
Users can engage in various activities such as socializing with other users, attending virtual events and concerts, playing games, exploring virtual spaces, and conducting business transactions.

The third pillar is the economy or virtual economy and commerce. Just as people can buy and sell goods and services in the physical world, they can do so in the metaverse, including the creation and sale of virtual goods such as clothing, furniture, paintings, and other digital items. In addition, virtual currency could be used to facilitate these transactions and potentially even be exchanged for physical currency. Blockchain is a digital ledger or database shared across a large network of nodes or computers. The nodes work together to authenticate and verify the transactions that are then stored and chained together in encrypted blocks of digital asset data. The more nodes that are running the blockchain, the safer and more secure it is since a hacker would have to hack more than half of the nodes to be able to make changes. Therefore, the blockchain forms a chronological verification for the data where the digital assets are distributed but not copied or transferred. Blockchain can support the decentralized infrastructure of the metaverse by providing extended storage capacity, trusted powerful data processing and authentication nodes, and interoperability across metaverse projects through seamless interactions and the sharing of related data, information, and resources. Commerce such as advertising and marketing will increase as people spend more time in virtual spaces. This provides opportunities for companies to market and sell products and services to the users. This could include targeted advertising based on user behavior and interests as well as sponsorships and product placements within virtual environments.
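The chaining and majority ideas described above can be illustrated with a small sketch. This is a toy example under stated assumptions, not how any particular metaverse platform or production blockchain works: the function names (`block_hash`, `add_block`, `is_valid`, `majority_tip`), the block fields, and the sample asset records are all hypothetical, and a simple "more than half of the copies agree" check stands in for a real consensus protocol.

```python
import hashlib
import json
from collections import Counter


def block_hash(index, timestamp, data, prev_hash):
    """Return the SHA-256 hash of a block's contents (hypothetical block layout)."""
    payload = json.dumps(
        {"index": index, "timestamp": timestamp, "data": data, "prev_hash": prev_hash},
        sort_keys=True,
    )
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def add_block(chain, data, timestamp):
    """Append a block that records the hash of the block before it."""
    prev_hash = chain[-1]["hash"] if chain else "0" * 64
    index = len(chain)
    block = {
        "index": index,
        "timestamp": timestamp,
        "data": data,
        "prev_hash": prev_hash,
        "hash": block_hash(index, timestamp, data, prev_hash),
    }
    chain.append(block)
    return block


def is_valid(chain):
    """Recompute every hash and check each block's link to its predecessor."""
    for i, block in enumerate(chain):
        expected = block_hash(
            block["index"], block["timestamp"], block["data"], block["prev_hash"]
        )
        if block["hash"] != expected:
            return False
        if i > 0 and block["prev_hash"] != chain[i - 1]["hash"]:
            return False
    return True


def majority_tip(node_copies):
    """Return the latest block hash held by more than half of the nodes, else None.

    Simplifying assumption: each node keeps a full copy of the chain, and
    copies that fail validation are ignored.
    """
    tips = Counter(c[-1]["hash"] for c in node_copies if c and is_valid(c))
    if not tips:
        return None
    tip, count = tips.most_common(1)[0]
    return tip if count > len(node_copies) / 2 else None


# Illustrative ledger of a virtual asset changing owners (made-up records).
ledger = []
add_block(ledger, {"item": "virtual painting", "owner": "avatar_A"}, timestamp=1)
add_block(ledger, {"item": "virtual painting", "owner": "avatar_B"}, timestamp=2)
```

In this sketch, editing the data in an earlier block changes its recomputed hash, so `is_valid` fails for that copy. A single altered copy is outvoted by the unaltered copies in `majority_tip`, which mirrors the point that an attacker would need to control more than half of the nodes to make a change stick.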

Pillar 4 represents technology, and it is constantly evolving and improving. Technology is essential for creating a truly immersive and engaging virtual environment. The technologies include crypto wallets, point of sale, avatars, display technologies, digital assets, digital twins, digital representations, blockchain, 3D reconstruction, computer programs, VR and AR, AI and machine learning, cloud computing, gaming and entertainment, IoT, and the Internet of Everything (IoE). The metaverse acts as a 3D user interface to the IoT devices, making possible personalized IoT user experiences where the user can make data-driven decisions with minimal training and expending minimal mental energy (Loth, 2022). The IoE is the intelligent connection of people, processes, data, and things where objects have sensors to detect, measure, and assess their status. The IoE affixes intelligence to the IoT and extends its communications from machine to machine and machine to people or users. Computer programs are those needed for the operation of the metaverse but also those that recognize and generate sights, scenes, and objects; recognize and synthesize sounds and speech; and output and render motions. The term 3D reconstruction refers to the technology that supports the creation of photorealistic objects capable of capturing the realism for virtual spaces. Using 3D reconstruction, a company could create a virtual showroom for its cars where users can explore and even test-drive a vehicle.

These technologies will continue to play a crucial role in the development and growth of the metaverse.

These four pillars are the foundation supporting the move toward realizing the concept of the metaverse where users will be able to seamlessly move from one virtual world to another within an interconnected metaverse. It is not possible yet, as we currently have individual metaverses.

Use Cases: How the Metaverse Is Affecting and Will Affect Health Care

Individual users, as well as organizations, will have a profound effect on shaping the metaverse as they enter, explore, and demand more capabilities. To attract the users, the metaverse must be compatible with the user's social values, providing social meaning and the situational context for them. A metaverse might not be the real world but can elicit tangible emotions and feelings. For the metaverse to approximate the real world, the interactions must be seamless and concurrent within an environment with presence. Those born from the mid- to late 1990s through early 2010s are the upcoming generation, Gen Z, and their social values indicate that they want to move through the realities of their lives with their online and offline persona or presence being the same.

The following use case examples (Table 25-1) demonstrate how educational, healthcare, and insurance-based organizations are entering and pushing the limits of the metaverse technologies to provide innovative, immersive, and engaging educational, clinical, financial, and recreational experiences in a secure environment that maintains privacy. Time will tell how far the metaverse can go under the influence of the enterprises and their constituents.

Table 25-1 Metaverse Use Cases

| | Education/Learning | Practice/Care |

|---|---|---|

Nursing and other healthcare professional students | The future healthcare workforce must be exposed to the latest technologies in their educational journey. The use of immersive simulations representing different settings, patient populations, and technologies must be used to prepare a career-ready graduate entering the future healthcare workforce. These same immersive settings can be used for telehealth experiences with actual patients. Students will learn how to interact with, navigate, and harness the power of the metaverse. Use cutting-edge MR and AI disruptive technologies to prepare practice-ready graduate nurses for the technology-laden clinical settings and for high-stakes, real-world scenarios. Create digital twins of actual campus settings or hospitals to help students become familiar with their learning settings. | Immersive simulations not only allow practice time prior to entering the actual clinical arena with real patients but can also expose students to infrequent patient presentations and events that cannot be done in the physical world such as disaster preparation. Provide clinical experiences using immersive learning simulations. Just as we seek clinical placements for our learners, we can create immersive environments that mimic our clinical settings to provide the necessary learning experiences. - Provide patient interactions through immersive VR settings to prepare students to interview and care for their actual patients.

- Integrate competency-based experiences and assessments using VR and AI to evaluate competence and practice readiness, from clinical judgment and decision-making through performing select procedures in a safe environment without risk or harm to patients (American Nurses Foundation, n.d.).

- Immersive experiences directed at the affective domain to teach sensitivity and empathy where learners become the patient to really experience their situational context (Brown, 2022).

- Use AI-enhanced avatars as standardized patients.

- Develop infrequently seen patient presentations using VR and AI for practicing nurses so that they can remain competent to provide setting-specific care.

|

Healthcare professionals | Immersive simulations can be used to maintain professional competency in the healthcare workforce not only in their clinical knowledge and skills but also in their knowledge and skill in the use of emerging technologies. | Enhanced patient safety. Immersive learning episodes can virtually simulate patient care encounters, allowing professionals to practice and hone their skills so that errors are reduced and time to treatment is expedited. In the surgical suite, surgeons can do the following: - Explore the patient's own mapped anatomy using VR and practice the surgery prior to operating on the actual patient.

- Meet with other specialists in a virtual operating suite to collaborate and practice an upcoming surgery or during the actual procedure.

Nurses can do the following: - Facilitate pain reduction through the use of VR technology instead of using medication.

- Distract patients during long and/or painful procedures to enhance patient comfort.

- Provide patient care by introducing apps such as those that help patients remember when to take their medications.

- Provide immersive learning experiences for caregivers to prepare them to care for their significant others after discharge.

- Introduce patients and their significant others to AI-powered agents that can help them monitor and improve their health status.

|

Patients | They can experience immersive learning episodes in consultation with their healthcare providers. They can acquire and use apps and AI agents. | Seek care via telehealth visits and consultations with healthcare providers they would normally interact with as well as top nursing and medical experts from around the world as the metaverse restructures, redesigns, and reimagines the provider-patient relationship and experience. Opportunities for wellness include diet; stress relief; fitness initiatives; and cues for treatment compliance, including medication dosage and time reminders. |

Healthcare settings | Metaverse hospital systems could provide virtual offerings that mirror brick-and-mortar facilities for patients and healthcare providers. | Metaverse hospital systems could offer the following programs using MR: - Telehealth

- Wellness

- Educational episodes that not only foster patient-provider relationship building but also provide just-in-time learning for both healthcare professionals and patients

- Collaborative opportunities that enhance the healthcare team's as well as the provider-patient dyad's involvement

|

Financial | Cryptocurrencies will be established by - hospitals for patient fees when accessing virtual and/or physical care; and

- learning organizations for student fees and faculty/staff salaries.

| Insurance companies must - determine how they will track, manage contracts, manage claims, and reimburse for metaverse or virtual healthcare visits in relation to how this is done for physical care episodes;

- establish specific coding for the metaverse virtual sessions; and

- use their own avatars to serve their customers by

- approaching those insured and companies in the metaverse; and

- providing immersive experiences such as procedure options and hospitalizations.

Learning organizations must do the following: - Develop cryptocurrency processes for collecting student fees and paying faculty and staff salaries.

- Petition financial aid to consider and support metaverse offerings for their students.

|

Privacy and security | Blockchain will be used to protect records and data. | Both healthcare and educational organizations will use blockchain and other technologies such as facial recognition to protect, secure, and manage the sharing and exchange of patient data with healthcare providers and third-party payers and student data. Encrypted AI algorithms can recognize the patient and/or student. |

Challenges Facing the Realization of the Metaverse

As we enact use cases and strive to realize the promise of the metaverse (a persistent, accessible, hyperconnected psychosocioeconomic digital and physical presence where we can learn, work, play, and shop no matter where we are physically), there are challenges that we must recognize and be able to overcome. This brief discussion will begin with the challenges facing everyone using the metaverse, including nurses, and will include nursing role-specific considerations (Table 25-2).

Table 25-2 Nursing Role-Specific Challenges

| Nursing Role | Challenges |

|---|---|

Nurse educator | Nurse educators must learn about the metaverse and its disruptive capabilities in order to have input and shape this new frontier by analyzing and synthesizing the evidence surrounding the metaverse pedagogy. They must identify and intentionally incorporate the best educational practices; immersive learning experiences; and significant, meaningful interactions to advance and develop an educational or learning metaverse. These educators must be able to help their administrators and other educators gain the expertise necessary to drive and harness the metaverse's teaching and learning potential. To accommodate learners with disabilities, they must find ways to address disparity with technological enhancements. If they provide a VR experience that some of the students cannot access and complete, how do they develop an alternative assignment that is comparable to the immersive and engaging VR episode? They must keep in mind that the learning must be equitable and of high quality but that it does not have to be equal. |

Nurse in clinical practice | Nurses in clinical practice must develop the expertise necessary to - develop metaverse solutions that not only improve patient outcomes but also enrich the nursing profession;

- assist other healthcare professionals with patient-care strategies for the metaverse; and

- help their patients understand and be able to navigate the metaverse to receive all three levels of prevention: primary, secondary, and tertiary.

|

Nurse researcher | Nurse researchers must find ways to engage with and study patients as they experience the metaverse. How can researchers truly track patients as they move about the metaverse? How will patients seek care? Will they experience the levels of prevention from different entities in the metaverse? Will they want or demand care in the metaverse versus physical care? Will there be separate institutional review boards (IRBs) for the virtual metaverse side of the institution versus the actual institution? Can one IRB approval be accepted by more than one virtual institution in the metaverse or between the real and virtual institutions? |

Nurse informaticist | It is imperative that nurse informaticists protect the security of patient data and information in the metaverse. As they rely on technologies and seamless transitions in the metaverse, they must remain vigilant in finding solutions that maintain their patients' privacy. It is important for them to - find ways to streamline digital procedures;

- constantly inform policymakers on the updates necessary to keep pace with the evolution of the metaverse;

- prepare others for accessing, entering, and using the metaverse to enrich their personal and professional lives, especially nurses and other healthcare professionals providing patient care and supporting metaverse-based care initiatives;

- realize that hardware plays a key role in the immersive experience and can be an obstacle to reaching the full potential of the metaverse, and collaborate with hardware vendors to advance their products to meet the needs of the evolving metaverse in relation to patient care and healthcare professionals' competency;

- collaborate with software vendors to develop software programs that are needed to interface with hardware and advance the metaverse frontier; and

- actively participate in the efforts necessary to realize the promise of the metaverse for themselves, other healthcare professionals, and patients and their significant others.

|

Nurse executive | Nurse executives must - learn everything they can about the metaverse and how it will impact their practice settings;

- afford professional development to the nurses under their supervision;

- create collaborative interdisciplinary teams to address the needs of their practice settings, especially as they relate to interoperability and the ability to securely share data and information;

- advocate for the commerce of the metaverse with their administrative peers; and

- advocate and prepare for the commerce of the metaverse with the integration of blockchain technology and cryptocurrency to secure monetary exchanges from patients and third-party payers.

|

The relationship between the IoT and the metaverse is becoming evident since one of the greatest challenges for the metaverse lies in its ability to access, correlate, and map data and information from the real world and meld it into the digital or virtual reality. This data and information must have meaning and be secured, accurate, organized, and available synchronously, in real time. Since the IoT has been around and in use for several years, there are hundreds of devices, including cameras, wearables, and sensors, that are available and ready to be used and incorporated into the metaverse. All nurses must assist policymakers in expanding their policies to handle the rapid evolution of technologies and capabilities that are and will be afforded as the metaverse develops. Policies typically do not keep pace with technological evolution and capabilities, and the evolution of the metaverse is no exception. For example, the Health Insurance Portability and Accountability Act (HIPAA) guidelines have tried to keep up with the expansion of telehealth and mobile computing; however, the metaverse has now entered the patient care arena, and lawmakers will need a wide variety of expert input, including from nurses, to help them understand and modernize the necessary guidelines based on the abundance of data and information in the metaverse. In addition, governance of the metaverse will be essential, which requires the establishment of a set of unified rules. Copyright and ownership issues must be addressed legally. Software and hardware vendors need help in understanding the metaverse frontier to create the products, programs, and devices that will meet the users' current needs and current state of the metaverse with an eye to the future. 
As with any digital platform, malicious activity and attacks that breach security will be an ever-present threat that could cause ethical issues, loss of data and information, invasion of privacy, harassment, and intentional human rights infringements, to name a few. With the metaverse's capabilities, users might risk physical harm from the prolonged use of wearable technologies, especially the HMDs or headsets, during their immersive activities. As with any socially interactive space, there are risks of emotional and psychosocial harm, whether intentional or unintentional. When dealing with a new frontier, everyone must be vigilant to maintain access and equity. Since the metaverse is presenting us with an enormous evolution of the internet, issues with interoperability and scalability must be recognized and addressed. For the metaverse to be viable, we must have the ability to exchange, use, and share data and information on a grand scale and to handle the expanding volume and workload. AI agents will need to be enhanced to improve and personalize the user experience by providing meaningful just-in-time assistance or information. Infrastructure improvements must be made to ensure reliable and persistent availability. There must be a secure system in place to be able to make payments for services or products in the metaverse. Blockchain and cryptocurrency (i.e., a method of digital payment using blockchain technology) must be implemented. Blockchain is a digital ledger that overcomes the weaknesses of centralized data storage by providing a secure means to create, own, and monetize decentralized digital assets.

The weaknesses and threats in the metaverse become challenges that must be eliminated, such as confidentiality issues, interoperability problems, privacy invasion, security breaches, faulty navigation, and the lack of seamless transitioning between the real world and the virtual or digital world as needed. There are many challenges to address as the metaverse evolves. It is important to keep an eye to the future while being grounded in the present as we strive to have the metaverse approach real-world exchanges, the seamless and concurrent interactions gained through presence, interfaces, and connections. As the metaverse mimics the real world, it can elicit or cause real emotions and feelings as people are immersed and present in their digital experiences. As more nurses and nurse informaticists learn about, research, and experience the metaverse, they must realize the effect they can have in shaping this new frontier for improving patient outcomes, enhancing their professional development, and advancing the nursing profession. Nurses and nurse informaticists must educate other nurses, patients, and the healthcare team as to how to leverage the benefits of the metaverse. Nurse informaticists must assess the effect of this new frontier on their own practice setting while helping other nurses and healthcare team members understand the technologies, functionality, and capabilities of the metaverse so that they can enhance their practices and support their patients. It is imperative that we determine how the nursing informatics specialty will influence and be affected by the metaverse. As we push the metaverse envelope and continue to create the cutting edge of this frontier, we must always be vigilant about security and privacy issues, access and entry equity, and persistence. It is every professional's responsibility to access, enter, and navigate this new evolution of the internet so that they can harness its potential for the benefit of the patients under their care.
Nurse informaticists and nurses must recognize and address the challenges as they arise during the evolution of the metaverse frontier.

In conclusion, the idea of the metaverse is not new, but technological advancements have brought about changes in our capabilities, enabling more realistic virtual worlds moving toward realizing the promise of the metaverse. Second Life, largely regarded as the first metaverse, was launched in 2003. Its features are too robust to cover here. However, it built the foundation from which the new metaverses are constructed since they employ many of the same features, from spatial audio to interactions with others. Spatial audio is the capability to direct how the user experiences sound to mimic the real world. Educators, business owners, and everyday people enjoyed the Second Life metaverse to work, make money, or relax from real-life stressors. Rumors on the internet suggest an update is coming for this older platform, enabling the use of VR along with today's advancements.

Metaverses are designed to extend the users' reality; they can replicate reality in a virtual environment that is as close to the real world as possible, thus allowing users to interact as they do in the real world while providing a safe environment. As our capabilities are enhanced, will we see more genuine actions, such as the use of haptics for handshaking and other greetings, incorporated into the metaverse to enhance realism? What other experiences can we expect to see in the next 3 months, 6 months, 1 year, and 2 years?

Summary ⬆ ⬇

Throughout this text, you have explored the field of nursing informatics and its effect, influence, and transformative powers in the healthcare arena, patient care innovations, and the nursing profession. As we are informed through the use of nursing informatics and expand our knowledge and technology-infused practice, we affect our reality, the reality of the healthcare industry, and the reality of our patients.

Through the application of knowledge, technologies, and nursing informatics, disruptive forces transform reality and disrupt the healthcare industry. We must make sense of and meet the challenge of this disruption. Personalized learning is disrupting how we conceptualize nursing curriculums and learning episodes. Forces beyond our control, such as the COVID-19 pandemic, disrupted our reality with governments mandating social distancing, quarantining, and isolation. In addition, disruptive innovation has changed the healthcare landscape.

Our reality is described and experienced through our own perceptual screen. We now have tools that provide us with different realities that we experience to the extent that we engage with them. Our reality related to health care and nursing will continue to be shaped by advancing knowledge and technologies.

The metaverse is not just a new trend or an improvement in existing technology. It is a paradigm shift in content consumption, which nurses and nurse informaticists must lead. The metaverse is emerging as an immersive technology with enormous potential for optimizing patient care across the entire healthcare continuum. We encourage everyone to join as participants in new paradigms that will disrupt and influence the future of health care and our professional and personal lives. We must participate so that our practice settings, patients, and profession have a place at the table. The metaverse is composed of many different elements in which the users' input and creativity are essential. It is believed that the future does not depend on the developers as much as the users, who can actually shape every facet of this new internet frontier. There will be ongoing challenges and issues that we must address since there will be many new, shifting dimensions of the metaverse. By anticipating and preparing to mitigate potential pitfalls and creating a patient-centered collaborative setting, healthcare providers in the metaverse will generate better expectations for this immersive and unparalleled frontier as they engage their constituents and colleagues to improve healthcare delivery. Of course, we have only scratched the surface, but we wanted to give you a glimpse of how our professional and personal lives will change with the innovative disruption in our healthcare arena that is challenging our paradigms and rapidly creating new ones: new ways of thinking, working, and learning.

Is reality as simple as how we as humans interact and make sense of different phenomena? If the phenomena include technology, how do these phenomena change our frame of reference and affect our reality? Do we see the technologies as mere tools we use and then return to the reality before their use, or do they truly become part of our reality and influence our worldview? Just as the concept of the metaverse has risen to the forefront and AI exploded onto our digital landscape, what will disrupt our reality next?

We would like you to contemplate the following notions:

- What will our reality be as a

  - healthcare professional,

  - patient,

  - layperson,

  - learner preparing for entry into the workforce, or

  - lifelong learner practitioner?

- Can we share our realities?

- How will we share our realities?

- Can we experience a shared reality?

- Will each setting have its own reality composed of the collaborative realities of the healthcare providers and the patients and the knowledge and technologies they use? Will each person have their own reality created by this interaction? What are the commonalities? What causes the differences?

- How will informatics influence, create, support, evaluate, and revise the realities of the future?

- What will you do to influence, create, support, evaluate, and revise the realities of the future?

- Just as we are concerned about access to health care, what about access to the realities?

- Will there be levels of reality and immersive depths?

- How will we prepare for these realities?

- In our personal lives, how will our realities be defined? Will they be perceived, constructed, created, crafted, designed, interpreted, rethought, or shared?

- Do we have multiple realities? Can there be multiple realities throughout a day, such as the reality of work and the reality of transportation home, and then, when we return home, the reality of being a parent, roommate, daughter, son, and so on? Do we move in and out of realities, or are all of these realities our total reality? What about when you don your headset?

- Will AI create our reality for us?

- Will we realize the promise of the metaverse?

- How do the individual realities of nurses transform the reality for nursing?

- How do nurses realize a transformed reality for health care?

| Thought-Provoking Questions |

|---|

- What is your current reality? Describe reality from your perspective and how your reality affects your patient care.

- What will your nursing reality look like in 1 year, 5 years, and 10 years? Provide a detailed description of how your reality will change.

- What will the reality be for our patients entering the healthcare system in 1 year, 5 years, and 10 years? Provide a detailed description of how their reality will change.

- Just as we have disruptive innovation, do we have disruptive reality? If so, describe it in detail.

- Reflect on AI and its effect on reality. Can and will AI craft our realities for us? Is AI part of our realities? Explain your answers in detail.

- Assess the concept of the metaverse. What is the reality of the metaverse, and how can we realize its promise?

|

References ⬆